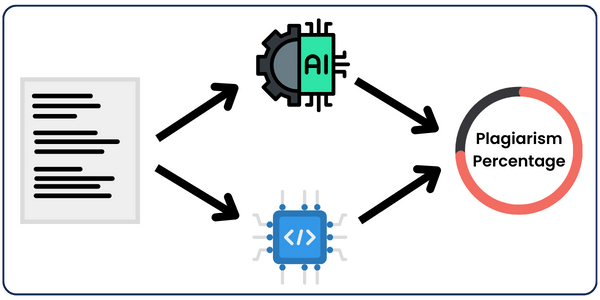

The field of software development is evolving rapidly, and the integration of artificial intelligence (AI) with coding practices is poised to transform the way developers work on their projects. Against this backdrop, there is a new project called Plandex that aims to simplify the process of building complex software. This is an open-source, terminal-based AI coding engine that utilizes the capabilities of OpenAI. It represents a significant advancement in coding efficiency and project management.

Plandex is a tool that automates the routine tasks of coding, allowing developers to concentrate on more innovative and challenging assignments. It was developed by a programmer who found the tedious process of constantly copying and pasting code between ChatGPT and other projects to be inconvenient. Plandex is exceptional, not only because it can handle intricate tasks that involve multiple files and steps but also because of its unique approach to managing the inevitable errors and the iterative nature of coding.

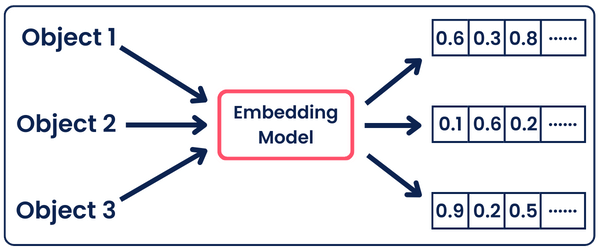

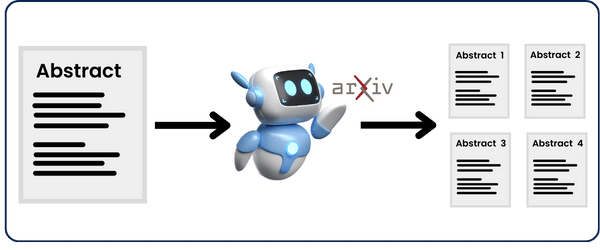

Plandex utilizes long-running agents that break down large tasks into manageable subtasks, methodically implementing each one. This approach ensures that tasks requiring extensive multi-file operations are completed efficiently, transforming how developers tackle their backlogs, explore new technologies, and overcome coding obstacles.

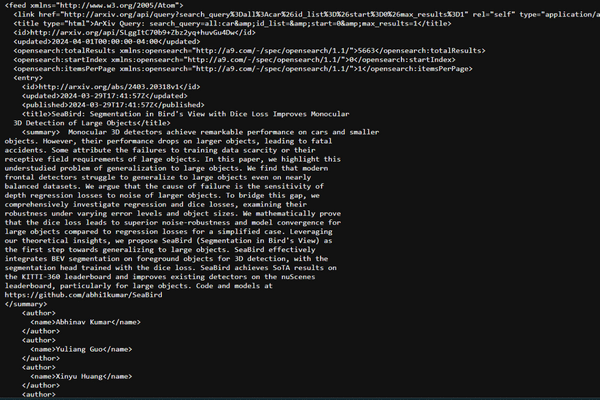

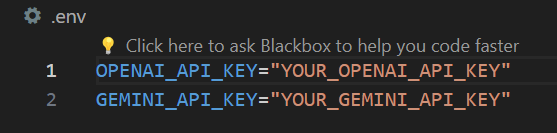

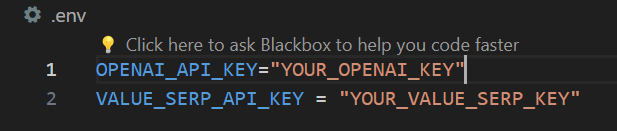

One of the key features of Plandex is its integration with the OpenAI API, requiring users to provide their API key. However, its roadmap includes support for other models, such as Google’s Gemini and Anthropic’s Claude, as well as open-source models, indicating a future where Plandex becomes even more versatile and powerful.

The Plandex project offers a range of functionalities tailored to enhance the coding experience:

- The ability to build complex software functionalities beyond mere autocomplete.

- Efficient context management within the terminal, enabling seamless updates of files and directories to ensure the AI models have access to the latest project state.

- A sandbox environment for testing changes before applying them to project files, complete with built-in version control and branching capabilities for exploring different coding strategies.

- It is compatible across Mac, Linux, FreeBSD, and Windows, running as a single binary without dependencies.

Plandex is more than just a tool, it represents a great help for developers for reducing the “copy-pasting madness” that currently affects modern software development. By providing a platform where developers can experiment, revise, and select the best approach without the need for manual context management, Plandex is leading the way towards a new era of software development.

Key Takeaways

- Plandex is an open-source, AI-powered coding engine designed to streamline the development of complex software projects.

- It leverages the OpenAI API to automate tasks across multiple files, enhancing productivity and focus for developers.

- Unique features like version control, sandbox testing, and efficient context management in the terminal set Plandex apart in the coding tools landscape.

- By minimizing the tedious aspects of coding and focusing on automation and efficiency, Plandex represents a significant advancement in the integration of AI into software development.