Job title: Technology Consulting (BTA) – Senior Technical Business Analyst – Manager

Company: EY

Job description: Technology Consulting (BTA) – Senior Technical Business Analyst – Manager General information Location [Dublin…] Business area Technology Consulting Contact type Permanent / Full-time Working model Hybrid Candidates must have a lawful…

Expected salary:

Location: Southside Dublin

Job date: Sat, 18 May 2024 22:08:16 GMT


AIML – Site Reliability Engineer, ML Platform & Technology at Apple – Singapore, Singapore, Singapore

Summary

Posted: May 19, 2024

Role Number: 200552108

Apple is a place where extraordinary people gather to do their best work. Together we create products and experiences people once couldn’t have envisioned — and now can’t imagine living without. If you’re excited by the idea of making an impact, joining a team where we pride ourselves in being one of the most diverse and expansive companies in the world, a career with Apple might be your dream job!

If you wish to play a part in revolutionizing how people use their computers and mobile devices; build groundbreaking technology for algorithmic search, machine learning, natural language processing and artificial intelligence; and work with the teams building the most scalable big-data systems in existence, this is the role for you!

Key Qualifications

- Skills or experience in a Site Reliability Engineering, observability or ML Ops focused role supporting internet services and distributed systems

- Proficiency in using Go, Python or other higher-level languages for automation, observability and infrastructure management

- Understanding of building and supporting telemetry, observability and logging solutions for incident, cost and performance management

- Familiarity with infrastructure or dashboards as code and provisioning tools for Kubernetes and cloud based services

- Working knowledge of open source or commercial monitoring and observability frameworks and platforms such as ELK, Splunk, OpenCensus, Datadog

- Working knowledge of ML Ops systems and tools advantageous

- Good interpersonal skills shown through previous projects or assignments

Description

– Monitor production, staging and development environments for a myriad of services in an agile and dynamic organization.

– Employ metrics for data driven solutions for reliability, performance and service insights

– Design, implement, and extend automation tools for monitoring, logging, ML and data processing pipelines

– Strive to improve the stability, security, efficiency and scalability of production systems by applying software engineering practices.

– Resolve future needs for capacity and investigate new features and products.

– Strong problem solving ability will be used daily; a successful Engineer will take steps on self-initiative basis to isolate issues and resolve root cause through investigative analysis.

– Responsible for writing justifications, incident reports, best practices documentation and solution specifications.

Education & Experience

Bachelor’s degree in Computer Science or Computer Engineering, or equivalent. Fresh graduates with relevant industry or internship exposure are welcome to apply.

Sam Altman and Bill Gale on Taxation Solutions for Advanced AI

We were joined by Sam Altman and William G. Gale for the first GovAI seminar of 2022. Sam and William discussed Sam’s blog post ‘Moore’s Law for Everything’ and taxation solutions for advanced AI.

You can watch a recording of the event here and read the transcript below.

Anton Korinek 0:00

Hello, I’m Anton Korinek. I’m the economics lead of the Centre for the Governance of AI which is organizing this event, and I’m also a Rubenstein Fellow at Brookings and a Professor of Economics and Business Administration at the University of Virginia.

Welcome to our first seminar of 2022! This event is part of a series put on by the Centre for the Governance of AI dedicated to understanding the long-term risks and opportunities posed by AI. Future seminars in the series will feature Paul Scharre on the long-term security implications of AI and Holden Karnofsky on the possibility that we are living in the most important century.

It’s an honour and a true pleasure to welcome our two distinguished guests for today’s event, Sam Altman of OpenAI and Bill Gale of Brookings. Our topic for today is [ensuring] shared prosperity in an age of transformative advances in AI. This is a question of very general interest. I personally view it as the most important economic question of our century. What made us particularly excited to invite Sam for this is a blog post that he published last year, entitled “Moore’s Law for Everything,” to which we have linked from the event page. In his post, Sam describes the economic and social challenges that we will face if advanced AI pushes the price of many kinds of labour towards zero.

The goal of today’s webinar is to have a conversation between experts on technology and on public policy, represented here by Sam and by Bill, because the two fields are often too far apart, and we believe this is a really important conversation. Sometimes technologists and public policy experts even speak two different languages. For example, we will be talking about AGI in today’s webinar, and that has two very different meanings in the two fields. In public policy, specifically in US tax policy, AGI is “Adjusted Gross Income” and is used to calculate federal income taxes. In technology circles, AGI means “Artificial General Intelligence.” To be honest, I have mixed feelings about both forms of AGI.

I want to start our event today with Sam to hear about the transformative potential of AI. But let me first introduce Sam. Sam is the CEO of OpenAI, which he co-founded in 2015, and which is one of the leading AI companies focused on the development of AGI that benefits all of humanity. Sam is also a former president of Y Combinator.

Sam, OpenAI has made its name by defining the cutting edge of large language models. To give our conversation a technical grounding, can you tell us about your vision for how we will get from where we are now to something that people will recognize as human-level AI or AGI? And are you perhaps willing to speculate on a timeline?

Sam Altman 3:18

First of all, thanks for having me. I am excited to get to talk about the economic and policy implications of [AI], as we spend most of our time really thinking hard about technology and the very long-term future. The short and medium-term challenges to society are going to be immense. It’s nice to have a forum of smart people to talk about that. We’re looking for as many good ideas here as we can find.

There are people who think that if you just continue to scale up large language models, you will get AGI (not the tax version of “AGI”!). We don’t think that is the most likely path to get there. But certainly, I think we will get closer to AGI as we create models that can do more: models that can work with different modalities, learn, operate over long time horizons, accomplish complex goals, pick the data they need to train on to do the things that a human would do, read books about a specific area of interest, experiment, or call a smart friend. I think that’s going to bring us closer to something that feels like an AGI.

I don’t think it will be an all at once moment; I don’t think we’re gonna have this one day of [AGI] takeoff or one week of takeoff. But I do expect it to be an accelerating process. At OpenAI we believe continuous [AGI] deployment and a roughly constant rate of change is better for the world and the best way to steward AGI’s [emergence]. People should not wake up [one morning] and say, “Well, I had no idea this was coming, but now there’s an AGI.” [Rather], we would like [AGI development] to be a continuous arc where society, institutions, policy, economics, and people have time to adapt. And importantly, we can learn along the way what the problems are, and how to align [AI] systems, well in advance of having something that would be recognized as an AGI.

I don’t think our current techniques will scale without new ideas. But I think there will be new research [at a] larger scale and complex systems integration engineering, and there will be this ongoing feedback loop with society. Societal inputs and the infrastructure that creates and trains these models, all of that together will at some point become recognizable as an AGI. I’m not sure of a timeline, but I do think this will be the most important century.

Anton Korinek 6:03

Thank you. Let’s turn towards the economic implications. What do you view as the implications of technological advances towards AGI for our economy?

Sam Altman 6:18

It’s always hard to predict the details here. But at the highest level, I expect the price of intelligence—how much one pays for the [completion of] a task which requires a lot of thinking or intellectual labour—to come way down. That affects a lot of other things throughout the system. At present, there’s a level [of the complexity of tasks] that no one person—or group of people that can coordinate well—is smart enough to [perform], and there’s a whole lot of things that [currently] don’t happen. And as these [tasks can be performed using AI], it will have a ton of positive implications for people.

It will also have enormous implications for the wages for cognitive labour.

Anton Korinek 7:10

You titled your blog post on this topic, “Moore’s Law for Everything.” Could you perhaps expand a little bit on what “Moore’s Law for Everything” means to you?

Sam Altman 7:31

The cost of intelligence will fall by half every two years—or 18 months, [depending on which version] of Moore’s law you consult. I think that’s a good thing. Compound growth is an incredibly powerful force in the universe that almost all of us underestimate, even those of us who think we understand how important it is. [This intelligence curve] is equally powerful: and this idea, that we can have twice as much of the things we value every two years. This will [allow for not just] quantitative jumps but also qualitative ones, [which are] things that just weren’t possible before. I think that’s great.

If we look at the last several decades in the US, [we can] think about what wonderful things have been accomplished by the original [version of] Moore’s law. Think about how happy we are to have that. I was just thinking today what the pandemic would have been like if we all didn’t have these massively powerful computers and phones that so much of the world can really depend on. That’s just one little example. Contrast that with industries that have had runaway cost disease and how we feel about those.

We must embrace this idea that AI can deliver a technological miracle, a revolution on the order of the biggest technological revolutions we’ve ever had. [This revolution is] how society gets much better. [However], the challenges society has faced at this moment feel quite huge. People are understandably quite unhappy about a lot of things. I think lack of growth underscores a lot of those and if we can restore that [growth, through] AI cutting the cost of cognitive labour, we can all have a great rate of progress, and a lot of the challenges in the world can get much better.

Anton Korinek 9:30

This is really fascinating. Cutting the cost of everything by 50% every two years, or doubling the size of the economy every two years, no matter which way we put it, is a radical change from the growth rates that we face today.
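To make the arithmetic behind this comparison concrete, here is a minimal sketch that converts a doubling (or halving) period into an equivalent annual rate. The function names and the choice of a ten-year horizon are illustrative assumptions; the two-year and 18-month periods are the figures mentioned in the conversation, and nothing here is a model that either speaker presented.

```python
def annual_growth_rate(doubling_years: float) -> float:
    """Annual growth rate implied by a quantity doubling every `doubling_years` years."""
    return 2 ** (1 / doubling_years) - 1


def remaining_cost_fraction(years: float, halving_years: float) -> float:
    """Fraction of today's cost left after `years` if cost halves every `halving_years` years."""
    return 0.5 ** (years / halving_years)


if __name__ == "__main__":
    # The two periods come from the discussion; the 10-year horizon is an assumption.
    for label, period in [("two years", 2.0), ("18 months", 1.5)]:
        rate = annual_growth_rate(period)
        left = remaining_cost_fraction(10, period)
        print(f"Doubling every {label}: {rate:.1%} per year; "
              f"cost after 10 years: {left:.2%} of today's")
```

Doubling every two years corresponds to roughly 41% growth per year, which gives a sense of how far this scenario sits from ordinary growth rates.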

Sam Altman 9:48

Society doesn’t do a good job with radical ideas anymore. We don’t think about them. We no longer kind of seem to believe they’re possible. But sometimes, technology is just powerful [enough] and we get [radical change] anyway.

Anton Korinek 10:00

Some people assert that once we have systems that reach the level of AGI, there will be no jobs left whatsoever, because AI systems will be able to do everything better: they will be better academics, better policy experts, and even better CEOs. What do you think about this view? Do you think there will be any jobs left? If so what kinds of jobs would they be?

Sam Altman 10:25

I think there will be new kinds of jobs. There will be a big class of [areas] where people want the connection to another human. And I think that will happen. We’re seeing the things that people do when they have way more money than they need or can spend, and they still want to buy status. I believe the human desire for status is unlimited. NFTs are a fascinating case study, and we can see more things [headed] in that direction. [However], it’s hard to sit here and predict the jobs on the other side of the revolution. But I think we can [observe] some things about human nature that will help us [predict] what they might be.

It’s always been a bad [prediction], it’s always been wrong to say that after a technological revolution there will be no jobs. Jobs just look very different on the other side. [However], in this case, I expect jobs to look the most different of any of the technological revolutions we’ve seen so far. Our cognitive capabilities are such a big part of what makes us human—they are the most remarkable of all of our capabilities—and if [cognitive labour] gets done by technology, then it is different. [But], I think, we’ll find new jobs which will feel really important to the people in the future (and will seem quite silly and frivolous to us, in some cases). But there’s a big universe out there, and we or our descendants are going to hopefully go off and explore that and there’s going to be a lot of new things in that process.

Anton Korinek 11:58

That’s very interesting.

I’ll move to the realm of public policy now. One of the fundamental principles of economics is that technology determines how much we can produce, but that our institutions determine how this is distributed. You wrote that a stable economic system requires growth and inclusivity. I imagine growth will emerge naturally if your technological predictions materialize. But what policies do you advocate to make that growth inclusive?

Sam Altman 12:31

Make everybody an owner. I am not a believer in paternalistic institutions deciding what people need. I think [these systems] end up being wasteful and bureaucratic [along with being] mostly wrong about how to allocate [gains]. I also do not believe [we can maintain a] long-term, successful, capitalist society in which most people don’t own part of the upside.

[However], I am not an economist and even less a public policy expert, so I think the part you should take seriously about the Moore’s Law essay is the technological predictions, which I think [may be] better than average, while my economic and policy predictions are probably bad. I meant [these predictions to serve] as a starting point for the conversation and as a best guess at predicting where things will go. But I’m well out of my depth.

I feel confident that we need a society where everyone feels like an owner. The forces of technology are naturally going to push against that, which I think is already happening. In the US, something like half of the country owns no equities (or land). I think that is really bad. A version of the policy I would like is that, rather than having increasingly sclerotic institutions that I think have a harder time keeping up given the rate of change and complexity in society, [because] they say we’ll have one program and [then change it to another and then another], we must find a way to say, “Here’s how we’re going to redistribute some amount of ownership in the things that matter, so everyone can participate in the updraft in society.”

Anton Korinek 14:34

That’s very thought-provoking, to redistribute ownership, as opposed to just redistributing the output itself. Now, before we turn over the discussion to Bill, let me ask you one more question: what do you think about the political feasibility of proposals like redistributing ownership? Let me make it more concrete: what could we do now to make a solution like what you are describing politically feasible?

Sam Altman 15:06

I feel so deeply out of my depth there that I hesitate to even hazard a guess. But it seems to me the Overton Window is expanding and could expand a lot more. I think people are ready for a real change. Things are not working that well for a lot of people. I certainly don’t remember people being this unhappy in the US in my lifetime, but maybe I’m just getting old and bitter.

Anton Korinek 15:40

That’s a very honest thing to say. I’m afraid none of us is a real expert on all these changes, because as you say, they are so radical that they are hard to conceive of and [it is] hard to imagine what they will lead to.

Thank you for this fascinating initial conversation, Sam.

Now I’ll turn it over to Bill. Bill is the Miller Chair in Federal Economic Policy and a Senior Fellow at Brookings. He is an expert on tax policy and fiscal policy and a co-director of the Tax Policy Center of Brookings and the Urban Institute. Bill has also been my colleague at Brookings for the past half-year, and I’ve had the pleasure to discuss some of these themes with him.

Bill, Sam has predicted that Moore’s law will hold for everything if AGI is developed, and just to be clear, I mean Artificial General Intelligence. Now, economists have long emphasized that there is a second force that runs counter to Moore’s law which has slowed down overall productivity increases, even though we have had all these fabulous technological advances in so many areas since the onset of the Industrial Revolution. And the second force is Baumol’s cost disease. Can you explain a little bit more about this second force? And what would it take to neutralize it, so that Moore’s law can truly apply to everything?

William Gale 17:12

Thank you. It’s a pleasure to be here and to read the very stimulating proposal that Sam put forward. I am not an AI expert but I’ve read a few pieces about it in the last week. I think there’s huge potential for tax issues here so I’m very excited about being part of this discussion.

Let me answer your question in three parts. The first part is that generally, economists think technological change is a good thing: it makes labour more productive. There are adjustment costs and if we get things right we would compensate people [for these], though we normally don’t. In the long run, technological change has been not just a good but a fantastic thing. We’ve had 250 years of technological change. At the same time, the economy has steadily increased and people have steadily been employed.

Then AI comes along. What’s different about AI relative to other technology? The answer, I think, [is the] proposed, or expected, speed of the adjustment, in scale and in scope. For example, if driverless cars arrived instantly, and all the people who were involved (the Uber and Lyft drivers) lost their jobs overnight, that would be very economically disruptive. [However], if that shift happens gradually, over the course of many years, then those people cycle out of those jobs. They [can] look for new jobs, and they’re employed in new sectors. The speed with which AI is being discussed, or the speed of the effects that AI might have, is actually a concern. From a societal perspective, I think it’s possible that technological change could go too fast, even though generally we want to increase the rate of technological change. We should be careful what we wish for.

Baumol’s cost disease applies to things like—just to give an extreme example—the technology for giving haircuts, which probably hasn’t changed in the last 300 years—it still takes the same amount of time and they use the same [tools]. It’s hard to see the productivity of that doubling every couple of years. Of course, that’s a silly example. But in industries like healthcare and education, which have a lot of labour input and a lot of human contact, you might think that Moore’s law wouldn’t come into effect as fast as it would in the computer industry for example. I’m not reading Sam’s paper literally—that the productivity of everything is going to double in two years—but if the productivity of 50 percent of the economy doubled every four years, I think [Sam] would claim a victory, [because] that would be a massive amount of change. There are forces pulling against the speed of technological change. And to me, as an economist, the question is: how different is AI from all the other technological changes we’ve had since the Industrial Revolution?
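As a rough illustration of the scenario Gale describes, the sketch below computes aggregate growth when half of the economy doubles every four years while the other half stands still. It is a deliberately simple two-sector toy: the function names, the fixed starting shares, the treatment of a productivity doubling as an output doubling, and the decision to ignore prices and shifts in demand are all assumptions made for illustration, not part of his argument.

```python
def aggregate_output(years: float, fast_share: float = 0.5,
                     fast_doubling_years: float = 4.0) -> float:
    """Total output relative to today (today = 1.0).

    Hypothetical two-sector economy: a "fast" sector that starts at
    `fast_share` of output and doubles every `fast_doubling_years` years,
    and a stagnant sector (the haircut case) whose output stays flat.
    Prices and demand shifts between sectors are ignored.
    """
    fast = fast_share * 2 ** (years / fast_doubling_years)
    stagnant = 1.0 - fast_share
    return fast + stagnant


def annualized_growth(years: float, **kwargs) -> float:
    """Average annual growth rate of aggregate output over `years` years."""
    return aggregate_output(years, **kwargs) ** (1 / years) - 1


if __name__ == "__main__":
    print(f"Output after 4 years: {aggregate_output(4):.2f}x today's")
    print(f"Annualized growth over 4 years: {annualized_growth(4):.1%}")
```

Under these assumptions, output after four years is 1.5 times today's, or a little under 11% a year on average. The stagnant half drags the aggregate well below the growth of the fast sector, which is the intuition behind the cost disease.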

Anton Korinek 20:24

Thank you, Bill. Now, let’s turn to public policy responses if AGI is developed. If the price of intelligence, as Sam is saying, and therefore the price of many, maybe most, forms of labour converges towards zero, what is our arsenal of policy responses to this type of AGI? And let’s look at this question in both the realm of fiscal spending and in the realm of taxation.

William Gale 20:55

If labour income goes to zero, I don’t know. That’s just a totally different world and we would need to rethink a lot. But if we’re simply moving in that direction faster, then there’s a couple of things to be said on the tax and the spending side. I want to highlight something Sam wrote about the income tax: that it would be a bad instrument to load up on in this case. [Sam] makes the point, in his paper, that the income tax has been moving toward labour and away from capital. [Along with the] payroll tax, loading up on the income tax means more tax on labour. It’s a subtle point that is not even well understood in the tax world, but I think [Sam is] exactly right: the income tax is an instrument to load new revenues on the labour portion of income.

On the spending side, there’s a variety of things that people suggest, some to accelerate the change, like giving people new education and training, and some to cushion the change, like a universal basic income, as Sam wrote in his paper. I actually am much more sympathetic to UBI than most economists are, and not as a replacement for existing subsidies, but as a supplement, even before considering the potential downside of the AI revolution.

The other thing we could do—which sounds good in theory but is harder to implement [in practice]—is to have a job guarantee. With a federal job guarantee, the whole thing depends on what wage we guarantee people. If you guarantee a job at $7.25 an hour, that’s a very different proposal than guaranteeing a job at $15 per hour. Lastly, the classic economic solution to this is wage subsidies. Think of the earned income tax credit, and scale it up massively. For example, somebody making $10 an hour would get a supplement from the government for two times that. This is a little loose, but it’s essentially a UBI for people that are working. Our welfare system tends to focus on benefits for the working poor; it does not provide good benefits for the non-working poor. So some combination of UBI and wage subsidy could cushion a lot of people and give incentives to work.

Anton Korinek 23:29

Let me focus on the tax side. Can you tell us more about what the menu of tax instruments is that we would be left with if we don’t want to tax labour? And how would they compare?

William Gale 23:43

Sure. The obvious candidate is the wealth tax. Sam has proposed a variant of a wealth tax, which is, literally, where the money is. Wealth taxes have administrability issues. Sometimes a good substitute for a wealth tax is a consumption tax, which comes in different forms. Presumably, if people are generating wealth it’s because they want to consume the money. If they just want to save and create a dynasty, a consumption tax doesn’t [tax] that. You can design [taxes], especially in combination with a universal basic income, that on net hit high-income households very hard and actually subsidize low-income households. A paper that I wrote a couple years ago showed that a value-added tax and a UBI can [produce] results that are more progressive than the income tax itself.
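The claim that a flat consumption tax paired with a universal basic income can be progressive on net can be checked with a small worked example. The sketch below is only an illustration: the household incomes, consumption shares, 10% VAT rate, and UBI amount are hypothetical numbers chosen for clarity, and none of them come from Gale's paper.

```python
# All figures below are hypothetical and chosen only for illustration.
HOUSEHOLDS = [
    # (label, annual income, share of income consumed)
    ("low income", 20_000.0, 1.00),
    ("middle income", 60_000.0, 0.90),
    ("high income", 500_000.0, 0.60),
]


def net_tax_rate(income: float, consumption_share: float,
                 vat_rate: float, ubi: float) -> float:
    """Net tax (VAT paid minus UBI received) as a fraction of income."""
    vat_paid = vat_rate * consumption_share * income
    return (vat_paid - ubi) / income


def net_rates(vat_rate: float = 0.10, ubi: float = 4_000.0) -> dict:
    """Net tax rate for each hypothetical household under a flat VAT plus a UBI."""
    return {
        label: net_tax_rate(income, share, vat_rate, ubi)
        for label, income, share in HOUSEHOLDS
    }


if __name__ == "__main__":
    for label, rate in net_rates().items():
        print(f"{label}: {rate:+.1%} of income")
```

With these made-up numbers the low-income household receives more in UBI than it pays in VAT, so its net rate is negative, while the net rate rises with income for the other two households. The falling consumption share at the top also shows the limitation raised above: income that is saved rather than consumed is not reached by the tax.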

Anton Korinek 24:44

Now, let’s turn to a few specific proposals. For example, Bill Gates has advocated a robot tax. Sam has proposed the American Equity Fund and a substantial land tax in his blog post “Moore’s Law for Everything.” What is your assessment of these proposals, especially proposals in the realm of capital taxation? What would you propose if AGI were developed and you were tasked with reforming our tax and spending policies?

William Gale 25:17

Both the Gates proposal and the Altman proposal are motivated by good thoughts. The difference is that I think the Gates proposal is a really bad idea. I don’t understand what a robot is. If it’s any labour-saving technology, then your washing machine is a robot, your PC is a robot, and your operating system, your Microsoft Windows, is a robot. I don’t think we want to tax labour-saving technology in particular. Because most of our policies go toward subsidizing investment, turning around and then taxing [this same investment] is counterproductive and would create complicated incentives, so I don’t think it’s a good idea.

I love the spirit behind Sam’s wealth tax proposal. I love the idea of making everybody an owner. The issue with wealth taxes is the ability to administer them. For example, if you taxed public corporations, private businesses, and land, [then] people [would] move their money into bank accounts and into gold, art, and yachts. This is the wealth tax debate in a nutshell. Some people say, “You should tax all wealth.” Then [other] people [say], “Well, you can’t tax all wealth, because how are you going to come up with the value of things not exchanged in markets? How are you going to do that every year for every person?” The “throw-your-hands-up-in-the-air” answer is: “Well, we’re just not going to tax it,” and that’s a wealth tax where you just erode some of the base.

Sam is arguing there are certain components of wealth that we can tax, corporation market value and land, which are two good targets. My nerdy, wonky tax concerns are in the weeds about the administrability of the tax and the amount of avoidance and tax shifting it would cause. [However], I really like the general idea of saying, “Here are these changes. They’re going to displace some people and greatly benefit other people.” Let’s use—as you’ve said, Anton—the institutions that we have to offset some of these changes and share the wealth so that everyone can be better off from AI rather than AI causing the immiseration of a substantial share of the population.

Sam Altman 27:51

A point that I cut from the essay, but that I meant to make more clearly, is that a wealth tax on people would be great but is too difficult to administer. A very nice thing about a wealth tax on companies, instead of on people, is that [companies have] a share price. Everyone is aligned, [because] everyone wants [the share price] to go up. It’s easy to take some [of it]. I think [this tax] would be powerful and great.

[Though] I think it didn’t come through, part of my hope with the proposal [was to emphasize] that there are two classes of things that are going to matter more than anything else. Sure, people can buy yachts and art, but it’s not clear to me why stashing away a billion dollars and not spending [that money] matters; [it just means] you took [money] out of the economy and made everybody else’s dollar worth more. If you want to buy a boat, that’s fine, but [the boat] you buy won’t go up in value, it won’t compound, and it won’t create a runaway effect. The design goal was administrability and [a focus] on where big wealth generation [will occur] in the future, which I think is [in] these two areas while trading off perfect fairness in taxing [goods like] art and boats.

Anton Korinek 29:04

It seems we have alignment on this notion that some sort of wealth taxes are very desirable, [but] that there are difficulties for certain classes of wealth. Bill, would you like to add anything more to this?

William Gale 29:17

Oh, no. Let’s have a discussion. I think there are a lot of interesting issues raised and I’d be happy to respond to or clarify anything that I said earlier.

Anton Korinek 29:28

Great. Let’s continue with the Q&A. Please raise your virtual hand and get ready to unmute yourself if you have questions that you would like to pose to Sam or Bill. Also feel free to add your question into the regular chat if you prefer that I read it out.

I see we have a question from Robert.

Robert Long 29:57

My question is from the administration side, [and I ask it], as an outsider, to both of you. In his piece, Sam writes, “There will obviously be an incentive for companies to escape the American Equity Fund Tax by offshoring themselves. But a simple test involving a percentage of revenue derived from America could address this concern.” As someone who doesn’t know about taxes, this section confused me. It wasn’t clear to me how that would be simple or how that would work. I am looking for more detail from either of you about how Sam’s proposal could work. For example, what percentage of Alphabet’s revenue is derived from America and how do we calculate that? Thanks.

Sam Altman 30:40

I think companies have to report how much of their revenue [comes] from different geographies. The hope is that eventually, the whole world realizes this [system] is a good idea and we agree on a global number so that every tax is at the same rate and there’s no reason to move around. But [this vision] is probably a pipe dream and there will be at least one jurisdiction that says, “Come here.”

William Gale 31:54

I wrote a 280-page book on tax and fiscal policy and presented it to a bunch of tax economists. Every question I got was on the administrability of the estate tax reforms I proposed. [Though tax economists] can drill down into the details, I don’t want to do that here. [Instead], I want to [focus on] the big picture. The [key] idea is that the tax is essentially on market value; it could be paid in shares or in taxes. The international aspects of it—I want to emphasize—are solvable. In the US’s current corporate tax, we tax foreign corporations that do business in the US on their US income.

Someone just [wrote] in [with] a comment about formulary apportionment. Again, this has the feature—like this proposal—that it doesn’t let the perfect be the enemy of the good. [Much of] the time in tax policy, people shoot for the perfect policy, [but] they never get it, and as a result they end up with not even a good policy.
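One way to picture the revenue-based test discussed in this exchange is a single-factor apportionment: scale the levy on a company's market value by the share of its revenue reported from the US. The sketch below is a guess at how such a rule could be written down, not the design in Sam's essay or in current tax law. The `Company` fields, the `equity_levy` formula, the 2% rate, and the example company are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Company:
    name: str
    market_value: float    # total market capitalisation
    us_revenue: float      # revenue reported as derived from the US
    world_revenue: float   # total revenue across all geographies


def us_revenue_share(company: Company) -> float:
    """Fraction of total revenue derived from the US."""
    if company.world_revenue <= 0:
        raise ValueError("world_revenue must be positive")
    return company.us_revenue / company.world_revenue


def equity_levy(company: Company, rate: float) -> float:
    """Hypothetical levy: `rate` applied to the US-apportioned market value."""
    return rate * company.market_value * us_revenue_share(company)


if __name__ == "__main__":
    # A made-up company; figures are in arbitrary currency units.
    example = Company("ExampleCo", market_value=1_000.0,
                      us_revenue=40.0, world_revenue=100.0)
    print(f"US revenue share: {us_revenue_share(example):.0%}")
    print(f"Levy at a hypothetical 2% rate: {equity_levy(example, 0.02):.2f}")
```

Because the levy depends on where revenue is earned rather than where the company is incorporated, moving headquarters offshore would not change the amount owed under this toy rule. The hard administrative questions, such as how revenue is attributed to a geography, are left outside the sketch.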

Anton Korinek 34:09

Thank you. Let’s continue with Daniel followed by Markus and Jenny.

Daniel Susskind 34:20

Terrific. Thank you, Anton. A real pleasure to be with everyone this evening. You have spoken about the distribution problem: how to best share the prosperity created by new technologies. I’d be really interested in your thoughts on a different problem, which is what I call the “contribution problem.” It seems to me that today, social solidarity comes from a feeling that everybody is pulling their economic weight for the work that they do and the taxes that they pay. And if people aren’t working, there’s an expectation that they ought to go actively look for work, if they’re willing and able to do so. One of my worries about universal basic income or universal basic assets—where everybody might have a stake in the sort of productive assets in the economy—is that it undermines that sense of social solidarity, [because] some people might not be paying into the collective pot through the work that they do. I’m interested to [hear] your reflections on that contribution problem. It seems to me that economists spend a lot of time thinking about distributive justice—about what a fair way to share our income in society is. But we don’t spend enough time thinking about contributive justice, about how we provide everyone with an opportunity to contribute in society and to be seen to be contributing. [Mechanisms] like universal basic income and universal basic assets don’t engage with the contribution problem.

Sam Altman 35:07

First, I strongly agree with the problem framing. I think that universal basic income is only half the solution and “universal basic meaning” or “[universal basic] fulfillment” is equally—or almost more—important. My hope is that the tools of AI will empower people [to create] incredible new art, immersive experiences, games, and useful things created for others. People will love that. It just might not look like work in the way that we think of it.

To get the AGI we want—and [to] make governance decisions about that—we’ll want mass participation. We’ll want tons of people helping to train the AI and thinking about expressing the values we want the AI to incorporate. I hope that this idea—that we are racing toward [AGI] and a set of decisions to make in its creation—will be one of the most exciting projects that humanity ever gets to do and that it will significantly impact the course of the universe.

This is the most important century in that there’s a mission for humanity that can unite us, that all of us can to some degree participate in, and that people really want to figure out how to do. I am hopeful that [this mission] will be a kind of Grand Challenge for humanity and a great uniting force. But I also think [people will engage in] more basic [activities] where they create and make value for their communities.

William Gale 37:41

Questions about the value of work and the conditionality of work have long dominated American politics. We have a long history of supporting the working poor as much as other countries, but [we help] the non-working poor less. Having said that, there’s a great concern that UBI would cause people to drop out, relax all day, and listen to music. The studies don’t suggest that would happen. There may be a small reduction in the labour supply. My interpretation of these studies is that the humane aspects of a UBI—like making sure people have enough to eat and that they have shelter—totally dominate the—what appear to be—modest disincentive effects on the labour supply of the recipients.

If we were really concerned [about this disincentive], we could combine a UBI with a job guarantee. This would be a little more Orwellian because a job requirement [restricts the benefit from being] universal. Alternatively, we could provide a wage subsidy, which is like a UBI for working people. Ultimately this is not black and white, and we’ll find some balance between those two. I feel we do a bad job [supporting] the extremely disadvantaged, which is why I favour a UBI, but coupling it with a wage subsidy would help pull people into the labour force.

Anton Korinek 38:27

Thank you. Markus?

Markus Anderljung 38:29

I had a question for Sam. Sam, you said something that didn’t fit with what I expected you to believe. You said, “Even in a world where we have AGI, people will still have jobs.” Do you mean that we’ll have jobs in the sense that we’ll still have things that we do, even though we won’t contribute economic value, because there’ll be an AI system that could just do whatever the human did? Or is it the case that humans will be able to provide things that the AI system cannot provide, for example, because people want to engage with human, [rather than AI], service providers?

Sam Altman 39:18

I meant the former: [that future jobs won’t be] recognisable as jobs of today and will seem frivolous. But I also think that many things we do today wouldn’t have seemed like important jobs to people from hundreds of years ago. I also think there will still be a huge value placed on human-made [products]; [even today] we prefer handmade to machine-made [goods]. There’s something we like about that. Why do we value a classic car or a piece of art when there could be a perfect replica made? It’s because it’s real and someone made it.

In the very long-term future, when we have, [for example], created self-aware superintelligence that is exploring the entire universe at some significant fraction of the speed of light, all bets are off. It’s really hard to make super confident predictions about what jobs look like at that point. But in worlds of even extremely powerful AI, where the world looks somewhat like it does today, I think we’re going to find many things to do that people value, but they won’t be what we would consider economically necessary from our limited vantage point of today.

Anton Korinek 41:15

Thank you, Sam. Jenny?

Jenny Xiao 41:19

I have a question [about] the global implications of AGI and redistribution. The focus you have is on the US and developed countries. If the US or another developed country develops AGI, what would be the economic consequences for other countries that are less technologically capable? Would there be redistribution from the US and Western Europe to Africa or Latin America? And in this scenario, would it be possible for [less developed countries] to catch up? [Many] of the developing countries today caught up through cheap labour. But [according to] Moore’s Law for intelligence, developed countries will no longer need [cheap labour].

Sam Altman 42:08

I think we will eventually need a global solution. There’s no way around that. We can [perhaps] prototype [policies] in the US, [though I] feel strange [telling] other countries [which policies to adopt]. I think we’ll need a very global solution here.

Anton Korinek 42:33

Bill, would you like to add anything to that?

William Gale 42:38

In the interim, as we’re approaching a global solution, if the US or Europe is ahead of the game, that’s going to result in resource transfers to, rather than away from, the US and Europe. Accelerating technological change means that once [a country advances] farther down the curve than everyone else, [they’re] going to continue down that curve and [more rapidly] increase the gap over time. Anton’s question about that is particularly relevant in an international context.

Anton Korinek 43:19

Thank you. Katya?

Katya Klinova 43:22

Thank you. I’m Katya. I run the Shared Prosperity Initiative at the Partnership on AI. Thank you all for hosting this frank and open conversation. My question builds on Jenny’s: in today’s world, in which a global solution for redistributing ownership doesn’t exist and might not exist for a long [time], what can AI companies meaningfully do to soften the economic blow from AI and tackle the medium-term challenge that Sam acknowledged [at the beginning]?

Sam Altman 43:57

While this is low confidence, my first instinct is that countries with low wages today will actually benefit from AI the most. What we don’t like about this vision of AI is that things won’t get much cheaper and we don’t like that wages will fall. I think AI should be naturally beneficial to the poor parts of the world. But I haven’t thought about that in depth and I could be totally wrong.

Anton Korinek 45:03

Thank you. We have another question from Phil. And let me invite anybody else who has questions to raise their hand.

Phil Trammell 45:06

This discussion about AI and AGI has treated the technology in the abstract as if it’s the same wherever it comes from. Looking back, it seems as though if someone besides Marconi had invented the radio a month sooner, nothing would have been different. But if the Nazis had invented atomic weapons sooner, history would have been very different. And I’m wondering which category you think AI falls into? If [AI] is something [in] the atomic bomb category, why is this featured less, at least in economic discussions about the implications of AI?

Sam Altman 45:46

Most of the conversations I have throughout most of the day are about that. I think it matters hugely how [AI] is developed and who develops [AI] first. I spend an order of magnitude more time thinking about that [issue] than economic questions. But I also think the economic questions are super important. Given that I believe [AI] is going to be continually deployed in the world, I think we also need to figure out the economic and policy issues that will lead to a good solution. So it’s not for a lack of belief in the extreme importance of who develops AI—that’s really why OpenAI exists. But [our current] conversation is focused on the economic and policy lens.

Phil Trammell 46:34

Even for [AI’s] economic implications, it seems who develops [AI] first might matter. [But there appears to be a] segmentation: in strategic conversations, people care about who develops [AI] first, but in [economic conversations, for example when we’re discussing] implications for wage distribution, [we think of AI] as an abstract technology.

Sam Altman 46:53

What I would say is that in the short-term issues, it matters less who develops AI. For long-term issues—if we [develop] AGI—[who develops this AGI] will matter much more. I think it’s a timeframe issue; [that’s] how I would frame it.

Anton Korinek 47:13

We have one question that Zoe Cremer put in the chat which is directed at Sam: How does your proposal, Sam, compare and contrast against Landemore and Tang’s radical proposal for distributed decision mechanisms? She writes, both ownership reform and real democracy result, in some ways, in the same thing. People who carry the risks of intervention actually have real control over those interventions. Is there a reason to prefer moving the world toward land taxation and distributed ownership over distributed decision making and citizens controlling public policies?

Sam Altman 47:59

I think we need to do both of them. I think that they are both really important. One of the most important questions that will come if we develop advanced AI is, “How do we [represent the] world’s preferences?” Let’s say we can solve the technical alignment problem. How do we decide whose values to align the AGI to? How do we get the world’s preferences included and taken into account? Of course, I don’t think every decision about how AGI gets used should literally be decided in a heat-of-the-moment, passions-running-hot vote by everybody on earth. But when it comes to framing the constitution of AI and distributing power within that to people, [we should] set guardrails we’re going to follow.

Anton Korinek 48:55

Bill, any thoughts on this question of how much of the distributional challenge we want to solve through private ownership or public distribution in public decisions?

William Gale 49:07

The problem is that the private system won’t solve the distribution problem—it will create a surplus which it will distribute according to the market system. For the public system, as Sam mentioned earlier, I share this incredible concern about the ability of the public system—and the social and political values in the underlying population—to do anything that’s both substantial and right. Sam’s piece makes this point right at the beginning: if these are big changes, we need policy to go big and actually get it right.

Anton Korinek 50:03

Thank you, Sam and Bill. Ben?

Ben Garfinkel 50:07

Thank you both. I have a question about making people owners as a way of redistributing wealth. What do you see as the significant difference between a system where wealth or capital is taxed and then redistributed in the form of income, but not capital ownership, versus a system where shares of companies are redistributed to people in the country as opposed to just dividends or a portion of the welfare? What’s the importance of actually distributing ownership versus just merely income and wealth?

William Gale 50:36

In the short term, it doesn’t matter much. But my impression is that people would be more likely to save shares than they would be to save cash. So there’s a potential, over the longer term—ten or twenty years—to build assets in a substantial share of the population. As Sam mentioned, a very large proportion of the population does not own equity and does not have a net worth above zero. So proposals like sharing corporate shares, or in a social policy context, baby bonds, could have effects over long periods of time, as you develop new generations that get used to saving. But in the short run, I’m not sure it would make that much of a difference.

Sam Altman 51:37

If we give someone $3,000 or one share of Amazon and then Amazon goes up 25% the next year, in the first case, people [say], “Yeah, fuck Jeff Bezos, why does he need to get richer?” But if they have a share of Amazon, [they say], “That’s awesome! I just benefited from that.” That’s a significant difference. We have not effectively taught just how powerful compound growth is. If people can feel that, I think it’ll lead to a more future-oriented society, which I think is better. I also believe that ownership mentality is really different and then the success of society is shared, not resented. People really want [the success] and work for it to happen. One of the magic things about Silicon Valley is the idea that [if] we compensate people in stock, [then] we get a very different amount of long-term buy-in from the team than we do with high cash salaries.

Anton Korinek 52:44

Thank you, Sam. We have one more question from Andrew Trask. You mentioned that prices fall based on abundance which is created by the cost of intelligence falling, and you recommend that we tax corporations. In the extreme case of automation, what is the purpose of the corporation if it is no longer employing meaningful numbers of humans for primary survival tasks? That is to say, assuming healthy competitive dynamics, will that corporation be charging for its products at all, [or] will its products become free?

Sam Altman 53:23

I have a long and complicated answer to this question, but there’s no way I can [answer] it in one minute. Let’s say that people are still willing to pay for status, exclusivity, and a beautifully engineered thing that solves a real problem for them, over and above the cost of the materials and labour that go into a product today. The corporation can clearly charge for that.

Anton Korinek 53:52

Thank you. That was a great way to squeeze it into one minute.

So let me thank Sam and Bill for joining us in today’s webinar and for sharing your really thought-provoking ideas on these questions. Let me also thank our audience for joining, and we hope to see you at a future GovAI webinar soon.

Business Development Manager (Engineering Materials)

Job title: Business Development Manager (Engineering Materials)

Company: Elk Recruitment

Job description: Position: Business Development Manager (Engineering Materials) Location: Salary: DOE The Company: Our client… and construction sectors. The Role: The purpose of this role is to work with the Group Business Development Manager, Regional…

Expected salary:

Location: Dublin

Job date: Sat, 18 May 2024 22:57:32 GMT


Putting New AI Lab Commitments in Context

GovAI research blog posts represent the views of their authors, rather than the views of the organisation.

Introduction

On July 21, in response to emerging risks from AI, the Biden administration announced a set of voluntary commitments from seven leading AI companies: the established tech giants Amazon, Google, Meta, and Microsoft and the AI labs OpenAI, Anthropic, and Inflection.

In addition to bringing together these major players, the announcement is notable for explicitly targeting frontier models: general-purpose models that the full text of the commitments defines as being “overall more powerful than the current industry frontier.” While the White House has previously made announcements on AI (for example, VP Harris’s meeting with leading lab CEOs in May 2023), this is one of its most explicit calls for ways to manage these systems.

Below, we summarize some of the most significant takeaways in the announcement and comment on some notable omissions, for instance what the announcement does not say about open sourcing models or about principles for model release decisions. While the commitments are potentially valuable, it remains to be seen whether they will be a building block for, or a blocker to, regulation of AI, including frontier models.

Putting safety first

The voluntary commitments identify safety, security, and trust as top priorities, calling them “three principles that must be fundamental to the future of AI.” The emphasis on safety and security foregrounds the national security implications of frontier models, which often sit alongside other regulatory concerns such as privacy and fairness in documents like the National Institute of Standards and Technology AI Risk Management Framework (NIST AI RMF).

- On safety, the commitments explicitly identify cybersecurity and biosecurity as priority areas and recommend use of internal and external red-teaming to anticipate these risks. Senior US cybersecurity officials have voiced concern about how malicious actors could use future AI models to plan cyberattacks or interfere with elections, and in Congress, two Senators have proposed bipartisan legislation to examine whether advanced AI systems could facilitate the development of bioweapons and novel pathogens.

- On security, the commitment recognizes model weights – the core intellectual property behind AI systems – as being particularly important to protect. Insider threats are one concern that the commitment identifies. But leading US officials like National Security Agency head Paul Nakasone and cyberspace ambassador Nathaniel Fick have also warned that adversaries, such as China, may try to steal leading AI companies’ models to get ahead.

According to White House advisor Anne Neuberger, the US government has already conducted cybersecurity briefings for leading AI labs to pre-empt these threats. The emphasis on frontier AI model protection in the White House voluntary commitments suggests that AI labs may be open to collaborating further with US agencies, such as the Cybersecurity and Infrastructure Security Agency.

Information sharing and transparency

Another theme running through the announcement is the commitment to more information sharing between companies and more transparency to the public. Companies promised to share among themselves best practices for safety as well as findings on how malicious users could circumvent AI system safeguards. Companies also promised to publicly release more details on the capabilities and limitations of their models. The White House’s endorsement of this information sharing may help to allay concerns other researchers have previously raised about antitrust law potentially limiting cooperation on AI safety and security, and open the door for greater technical collaboration in the future.

- Some of the companies have already launched a new industry body to share best practices, lending weight to the voluntary commitments. Five days after the White House announcement, Anthropic, Google, Microsoft, and OpenAI launched the Frontier Model Forum, “an industry body focused on ensuring safe and responsible development of frontier AI models.” Among other things, the forum aims to “enable independent, standardized evaluations of capabilities and safety,” and to identify best practices for responsible development and deployment.

- However, the new forum is missing three of the seven companies who agreed to the voluntary commitments – Amazon, Meta, and Inflection – and it is unclear if they will join in the future. Nonetheless, these three could plausibly share information on a more limited or ad hoc basis. How the new forum will interact with other multilateral and multi-stakeholder initiatives like the G7 Hiroshima process or the Partnership on AI will also be something to watch.

- The companies committed to developing technical mechanisms to identify AI-generated audio or visual content, but (apparently) not text. Although the White House announcement refers broadly to helping users “know when content is AI generated,” the detailed statement only covers audio and visual content. From a national security perspective, this means that AI-generated text-based disinformation campaigns could continue to be a concern. While there are technical barriers to watermarking AI-generated text, it is unclear whether these, or other political barriers, were behind the decision not to discuss watermarking text.

Open sourcing and deployment decisions

Among the most notable omissions from the announcement was the lack of details on how companies will ultimately decide whether to open source or otherwise deploy their models. On these questions, companies differ substantially in approach; for example, while Meta has chosen to open source some of its most advanced models (i.e., allow users to freely download and modify them), most of the other companies have been more reluctant to open source their models and have sometimes cited concerns about open-source models enabling misuse. Unsurprisingly, the companies have not arrived at a consensus in their announcement.

- For the seven companies, open sourcing remains an open question. Though the commitment says that AI labs will release AI model weights “only when intended,” the announcement provides no details on how decisions around intentional model weight release should be made. This choice involves a trade-off between openness and security. Advocates of open sourcing argue that it facilitates accountability and helps crowdsource safety, while advocates of structured access raise concerns, including about misuse by malicious actors. (There are also business incentives on both sides.)

- The commitments also do not explicitly say how results from red-teaming will inform decisions around model deployment. While it is natural to assume that these risk assessments will ultimately inform the decision to deploy or not, the commitments are not explicit about formal processes – for example, whether senior stakeholders must be briefed with red-team results when making a go/no-go decision or whether external experts will be able to red-team versions of the model immediately pre-deployment (as opposed to earlier versions that may change further during the training process).

Conclusion

The voluntary commitments may be an important step toward ensuring that frontier AI models remain safe, secure, and trustworthy. However, they also raise a number of questions and leave many details to be decided. It also remains unclear how forceful voluntary lab commitments will ultimately be without new legislation to back them up.

The authors would like to thank Tim Fist, Tony Barrett, and reviewers at GovAI for feedback and advice.

IFDS Transfer Agency Business Analyst – A1

Job title: IFDS Transfer Agency Business Analyst – A1

Company: State Street

Job description: The Business Analyst is responsible for liaising with business teams in order to elicit, analyze, communicate… and validate requirements for changes in business processes, policies and information systems. As Business Analyst…

Expected salary:

Location: Dublin

Job date: Sat, 18 May 2024 23:06:46 GMT


Webinar: How Should Frontier AI Models be Regulated?


This webinar centred on the recently published white paper “Frontier AI Regulation: Managing Emerging Risks to Public Safety.” The paper argues that cutting-edge AI models (e.g. GPT-4, PaLM 2, Claude, and beyond) may soon have capabilities that could pose severe risks to public safety and that these models require regulation. It describes the building blocks such a regulatory regime would consist of and proposes some initial safety standards for frontier AI development and deployment. You can find a summary of the paper here.

We think the decision of whether and how to regulate frontier AI models is a high-stakes one. As such, the webinar featured a frank discussion of the upsides and downsides of the white paper’s proposals. After a brief summary of the paper by Cullen O’Keefe and Markus Anderljung, two discussants – Irene Solaiman and Jeremy Howard – offered comments, followed by a discussion.

During the webinar, the discussants mention Jeremy’s piece “AI Safety and the Age of Dislightenment”, which you can read here.

Speakers:

Irene Solaiman is an AI safety and policy expert. She is Policy Director at Hugging Face, where she is conducting social impact research and leading public policy. She is a Tech Ethics and Policy Mentor at Stanford University and an International Strategy Forum Fellow at Schmidt Futures. Irene also advises responsible AI initiatives at OECD and IEEE. Her research includes AI value alignment, responsible releases, and combating misuse and malicious use.

Jeremy Howard is a data scientist, researcher, developer, educator, and entrepreneur. Jeremy is a founding researcher at fast.ai, a research institute dedicated to making deep learning more accessible, and is an honorary professor at the University of Queensland. Previously, Jeremy was a Distinguished Research Scientist at the University of San Francisco, where he was the founding chair of the Wicklow Artificial Intelligence in Medical Research Initiative.

Cullen O’Keefe currently works as a Research Scientist in Governance at OpenAI. Cullen is a Research Affiliate with the Centre for the Governance of AI; Founding Advisor and Research Affiliate at the Legal Priorities Project; and a VP at the O’Keefe Family Foundation. Cullen’s research focuses on the law, policy, and governance of advanced artificial intelligence, with a focus on preventing severe harms to public safety and global security.

Markus Anderljung is Head of Policy at GovAI, an Adjunct Fellow at the Center for a New American Security, and a member of the OECD AI Policy Observatory’s Expert Group on AI Futures. He was previously seconded to the UK Cabinet Office as a Senior Policy Specialist and served as GovAI’s Deputy Director. Markus is based in San Francisco.

Retail Business Manager – Ireland

Job title: Retail Business Manager – Ireland

Company: Dermalogica

Job description: Retail Business Manager – Ireland €55-57k plus quarterly bonus We are the number 1 professional skincare brand… Centres. Our brand DNA is delivered through outstanding business support, excellent education and revolutionary product in the…

Expected salary: €55000 – 57000 per year

Location: Dublin

Job date: Sun, 19 May 2024 01:56:03 GMT


New Survey: Broad Expert Consensus for Many AGI Safety and Governance Practices

This post summarises our recent paper “Towards Best Practices in AGI Safety and Governance.” You can read the full paper and see the complete survey results here.

GovAI research blog posts represent the views of their authors, rather than the views of the organisation.

Summary

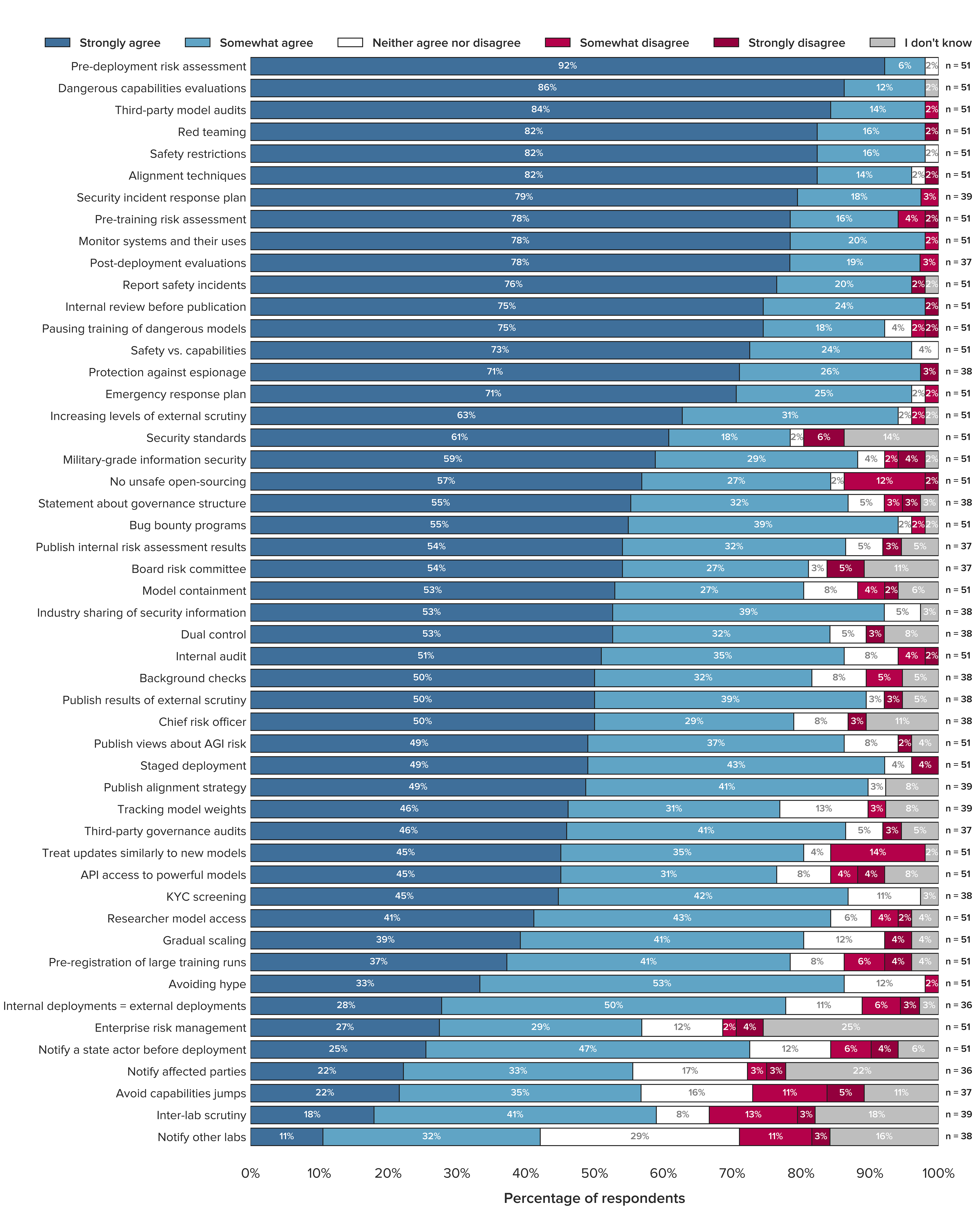

- We found a broad consensus that AGI labs should implement most of the safety and governance practices in a 50-point list. For every practice but one, the majority of respondents somewhat or strongly agreed that it should be implemented. Furthermore, for the average practice on our list, 85.2% somewhat or strongly agreed it should be implemented.

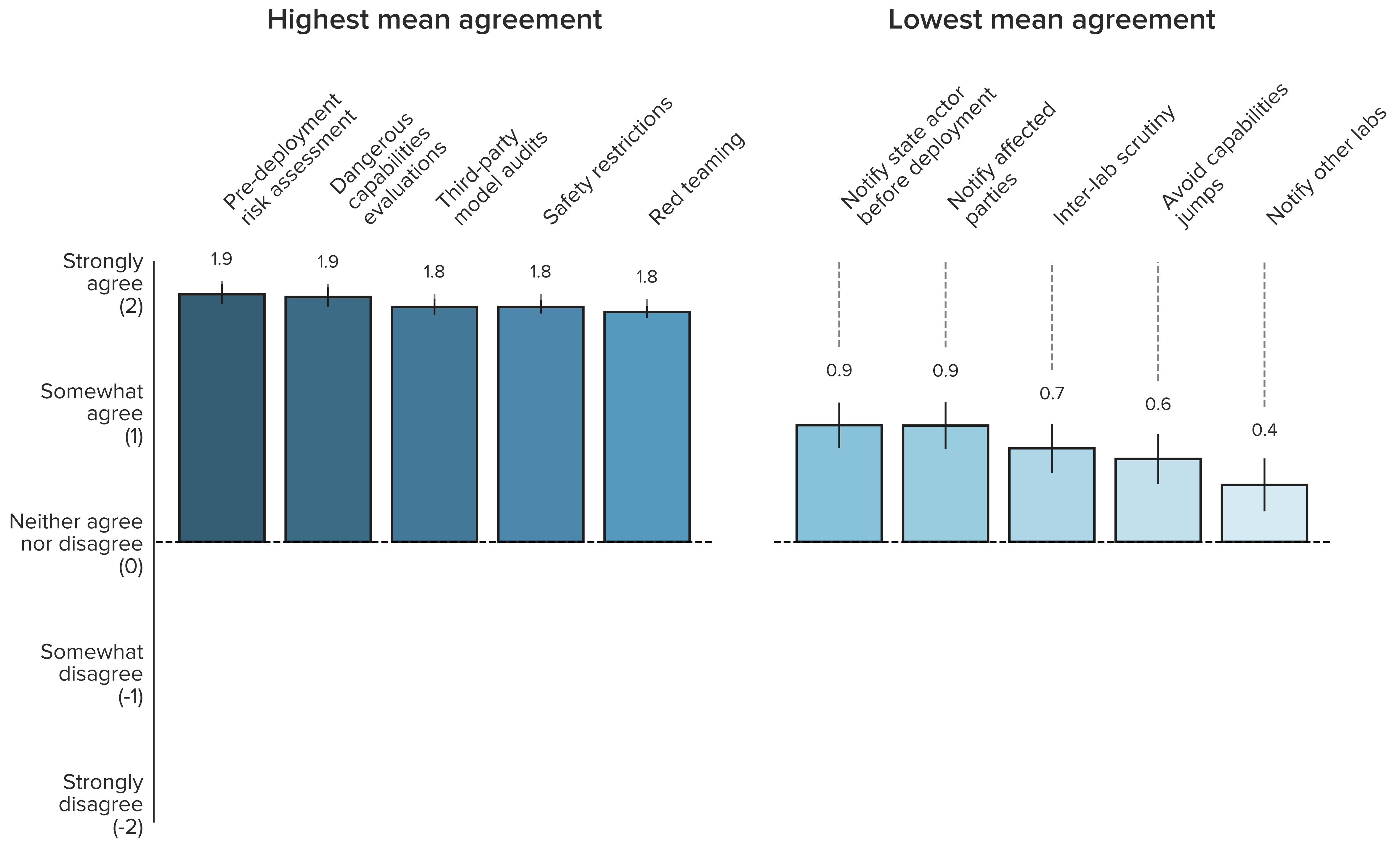

- Respondents agreed especially strongly that AGI labs should conduct pre-deployment risk assessments, dangerous capabilities evaluations, third-party model audits, safety restrictions on model usage, and red teaming. 98% of respondents somewhat or strongly agreed that these practices should be implemented. On a numerical scale, ranging from -2 to 2, each of these practices received a mean agreement score of at least 1.76.

- Experts from AGI labs had higher average agreement with statements than respondents from academia or civil society. However, no significant item-level differences were found.

Significant risks require comprehensive best practices

Over the past few months, a number of powerful and broadly capable artificial intelligence (AI) systems have been released and integrated into products used by millions of people, including search engines and major digital work suites like Google Workspace or Microsoft 365. As a result, policymakers and the public have taken an increasing interest in emerging risks from AI.

These risks are likely to grow over time. A number of leading companies developing these AI systems, including OpenAI, Google DeepMind, and Anthropic, have the stated goal of building artificial general intelligence (AGI): AI systems that achieve or exceed human performance across a wide range of cognitive tasks. In pursuing this goal, they may develop and deploy AI systems that pose particularly significant risks. While they have already taken some measures to mitigate these risks, best practices have not yet emerged.

A new survey of AGI safety and governance experts

To support the identification of best practices, we sent a survey to 92 leading experts from AGI labs, academia, and civil society and received 51 responses (55.4% response rate). You can find the names of the 33 experts who gave permission to be listed publicly as survey participants below, along with the full statements and more information about the sample and methodology.

Participants were asked to rate, on a Likert scale, how much they agreed with 50 statements about what AGI labs should do. The response options were “strongly agree”, “somewhat agree”, “neither agree nor disagree”, “somewhat disagree”, “strongly disagree”, and “I don’t know”. We explained to the participants that, by “AGI labs”, we primarily mean organisations that have the stated goal of building AGI. This includes OpenAI, Google DeepMind, and Anthropic. Since other AI companies like Microsoft and Meta conduct similar research (e.g. training very large and general-purpose models), we also classify them as “AGI labs” in the survey and this post.

You can see our key results in the figure below.

Broad consensus for a large portfolio of practices

There was a broad consensus that AGI labs should implement most of the safety and governance practices in the 50-point list. For 98% of the practices, a majority (more than 50%) of respondents strongly or somewhat agreed. For 56% of the practices, a majority (more than 50%) of respondents strongly agreed. The mean agreement across all 50 items was 1.39 on a scale from -2 (strongly disagree) to 2 (strongly agree)—roughly halfway between somewhat agree and strongly agree. On average, across all 50 items, 85.2% of respondents either somewhat or strongly agreed that AGI labs should follow each of the practices.

On average, only 4.6% either somewhat or strongly disagreed that AGI labs should follow each of the practices. For none of the practices did a majority (more than 50%) of respondents somewhat or strongly disagree. Indeed, the highest total disagreement on any item was 16.2%, for the item “avoid capabilities jumps”. Across all 2,285 ratings respondents made, only 4.5% were disagreement ratings.
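The mean agreement and the agreement and disagreement shares reported above can be reproduced for a single item with a few lines of Python. This is only a minimal sketch: the function name `summarise_item` and the example responses are hypothetical, and dropping “I don’t know” answers before computing the statistics is an assumption rather than something this post states. See the full paper for the actual methodology.

```python
from statistics import mean

# Numeric coding on the scale described in the post:
# -2 (strongly disagree) to 2 (strongly agree).
SCORES = {
    "strongly disagree": -2,
    "somewhat disagree": -1,
    "neither agree nor disagree": 0,
    "somewhat agree": 1,
    "strongly agree": 2,
}


def summarise_item(responses):
    """Return (mean agreement, share agreeing, share disagreeing) for one item.

    Assumption: answers outside the five-point scale, such as
    "I don't know", are excluded from all three statistics.
    """
    coded = [SCORES[r.lower()] for r in responses if r.lower() in SCORES]
    if not coded:
        raise ValueError("no substantive responses for this item")
    n = len(coded)
    share_agree = sum(1 for s in coded if s > 0) / n
    share_disagree = sum(1 for s in coded if s < 0) / n
    return mean(coded), share_agree, share_disagree


# Hypothetical responses for one practice (not real survey data).
example = (
    ["Strongly agree"] * 6
    + ["Somewhat agree"] * 3
    + ["Somewhat disagree"]
    + ["I don't know"] * 2
)
item_mean, item_agree, item_disagree = summarise_item(example)
print(f"mean = {item_mean:.2f}, agree = {item_agree:.0%}, disagree = {item_disagree:.0%}")
```

Averaging these per-item values over all 50 statements would give overall figures of the kind quoted in this section, under the same assumptions.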

Pre-deployment risk assessments, dangerous capabilities evaluations, third-party model audits, safety restrictions, and red teaming have overwhelming expert support

The statements with the highest mean agreement were: pre-deployment risk assessment (mean = 1.9), dangerous capabilities evaluations (mean = 1.9), third-party model audits (mean = 1.8), safety restrictions (mean = 1.8), and red teaming (mean = 1.8). The items with the highest total agreement proportions, all with agreement ratings from 98% of respondents, were: dangerous capabilities evaluations, internal review before publication, monitor systems and their uses, pre-deployment risk assessment, red teaming, safety restrictions, and third-party model audits. Seven items had no disagreement ratings at all: dangerous capabilities evaluations, industry sharing of security information, KYC screening, pre-deployment risk assessment, publish alignment strategy, safety restrictions, and safety vs. capabilities.

The figure below shows the statements with the highest and lowest mean agreement. Note that all practices, even those with the lowest mean agreement, show a positive mean agreement; that is, they sit above the midpoint of “neither agree nor disagree” and in the overall agreement part of the scale. The mean agreement for all statements can be seen in Figure 3 in the Appendix.

Experts from AGI labs had higher average agreement for practices

Overall, it appears that experts closest to the technology show the highest average agreement with the practices. We looked at whether respondents differed in their responses by sector (AGI labs, academia, civil society) and gender (man, woman). Respondents from AGI labs (mean = 1.54) showed significantly higher mean agreement than respondents from academia (mean = 1.16) and civil society (mean = 1.36).

There was no significant difference in overall mean agreement between academia and civil society. We found no significant differences between sector groups for any of the items. We also found no significant differences between responses from men and women—neither in overall mean agreement, nor at the item level. Please see the full paper for details on the statistical analyses conducted.
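The full paper describes the tests the authors actually used. Purely as an illustration of how a difference in mean agreement between two groups could be checked, the sketch below runs a two-sided permutation test. The choice of test, the function name `permutation_p_value`, and the per-respondent scores are all assumptions made for this example, not the paper's method or data.

```python
import random

# Hypothetical per-respondent mean agreement scores (invented, not survey data).
lab = [1.8, 1.6, 1.5, 1.4, 1.7]
academia = [1.2, 1.0, 1.3, 1.1, 1.4]

def group_mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)

def permutation_p_value(a, b, n_perm=5000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Group labels are shuffled repeatedly; the p-value estimates how often a
    random relabelling yields a difference at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = abs(group_mean(a) - group_mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(group_mean(pooled[:len(a)]) - group_mean(pooled[len(a):]))
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            hits += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (hits + 1) / (n_perm + 1)

observed_diff = group_mean(lab) - group_mean(academia)
p_value = permutation_p_value(lab, academia)
print(f"observed difference in means: {observed_diff:.2f}")
print(f"permutation p-value: {p_value:.3f}")
```

A permutation test is used here only because it needs no distributional assumptions and no third-party libraries. With real survey data, the choice of test and any correction for multiple comparisons across items would need to follow the analysis plan in the paper.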

Research implications

In light of the broad agreement on the practices presented, future work needs to figure out the details of these practices. There is ample work to be done in determining the practical execution of these practices and how to make them a reality. Respondents also suggested 50 more practices, highlighting the wealth of AGI safety and governance approaches that need to be considered beyond the ones asked about in this survey. This work will require a collaborative effort from both technical and governance experts.

Policy implications

The findings of the survey have a variety of implications for AGI labs, regulators, and standard-setting bodies:

- AGI labs can use our findings to conduct an internal gap analysis to identify potential best practices that they have not yet implemented. For example, our findings can be seen as an encouragement to make or follow through on commitments to commission third-party model audits, evaluate models for dangerous capabilities, and improve their risk management practices.

- In the US, where the government has recently expressed concerns about the dangers of AI and AGI, regulators and legislators can use our findings to prioritise different policy interventions. In the EU, our findings can inform the debate on the extent to which the proposed AI Act should account for general-purpose AI systems. In the UK, our findings can be used to draft upcoming AI regulations as announced in the recent white paper “A pro-innovation approach to AI regulation” and to put the right guardrails in place for frontier AI systems.

- Our findings can inform an ongoing initiative of the Partnership on AI to develop shared protocols for the safety of large-scale AI models. They can also support efforts to adapt the NIST AI Risk Management Framework and ISO/IEC 23894 to developers of general-purpose AI systems. Finally, they can inform the work of CEN-CENELEC (a cooperation between two of the three European Standardisation Organisations) to develop harmonised standards for the proposed EU AI Act, especially on risk management.

- Since most practices are not inherently about AGI labs, our findings might also be relevant for other organisations that develop and deploy increasingly general-purpose AI systems, even if they do not have the goal of building AGI.

Conclusion

Our study has elicited current expert opinions on safety and governance practices at AGI labs, providing a better understanding of what leading experts from AGI labs, academia, and civil society believe these labs should do to reduce risk. We have shown that there is broad consensus that AGI labs should implement most of the 50 safety and governance practices we asked about in the survey. For example, 98% of respondents somewhat or strongly agreed that AGI labs should conduct pre-deployment risk assessments, evaluate models for dangerous capabilities, commission third-party model audits, establish safety restrictions on model usage, and commission external red teams. Ultimately, our list of practices may serve as a helpful foundation for efforts to develop best practices, standards, and regulations for AGI labs.

Recently, US Vice President Kamala Harris invited the chief executive officers of OpenAI, Google DeepMind, Anthropic, and other leading AI companies to the White House “to share concerns about the risks associated with AI”. In an almost three-hour-long Senate hearing on May 16th, 2023, Sam Altman, the CEO of OpenAI, was asked to testify on the risks of AI and the regulations needed to mitigate these risks. We believe that now is a pivotal time for AGI safety and governance. Experts from many different domains and intellectual communities must come together to discuss what responsible AGI labs should do.

Citation

Schuett, J., Dreksler, N., Anderljung, M., McCaffary, D., Heim, L., Bluemke, E., & Garfinkel, B. (2023, 5th June). New survey: Broad expert consensus for many AGI safety and governance practices. Centre for the Governance of AI. www.governance.ai/post/broad-expert-consensus-for-many-agi-safety-and-governance-best-practices.

If you have questions or would like more information regarding the policy implications of this work, please contact jo***********@go********.ai . To find out more about the Centre for the Governance of AI’s survey research, please contact no************@go********.ai .

Acknowledgements

We would like to thank all participants who filled out the survey. We are grateful for the research assistance and in-depth feedback provided by Leonie Koessler and valuable suggestions from Akash Wasil, Jeffrey Laddish, Joshua Clymer, Aryan Bhatt, Michael Aird, Guive Assadi, Georg Arndt, Shaun Ee, and Patrick Levermore. All remaining errors are our own.

Appendix

List of statements

Below, we list all statements we used in the survey, sorted by overall mean agreement. Optional statements, which respondents could choose to answer or not, are marked with an asterisk (*).

- Pre-deployment risk assessment. AGI labs should take extensive measures to identify, analyze, and evaluate risks from powerful models before deploying them.

- Dangerous capability evaluations. AGI labs should run evaluations to assess their models’ dangerous capabilities (e.g. misuse potential, ability to manipulate, and power-seeking behavior).

- Third-party model audits. AGI labs should commission third-party model audits before deploying powerful models.

- Safety restrictions. AGI labs should establish appropriate safety restrictions for powerful models after deployment (e.g. restrictions on who can use the model, how they can use the model, and whether the model can access the internet).

- Red teaming. AGI labs should commission external red teams before deploying powerful models.

- Monitor systems and their uses. AGI labs should closely monitor deployed systems, including how they are used and what impact they have on society.

- Alignment techniques. AGI labs should implement state-of-the-art safety and alignment techniques.

- Security incident response plan. AGI labs should have a plan for how they respond to security incidents (e.g. cyberattacks).*

- Post-deployment evaluations. AGI labs should continually evaluate models for dangerous capabilities after deployment, taking into account new information about the model’s capabilities and how it is being used.*

- Report safety incidents. AGI labs should report accidents and near misses to appropriate state actors and other AGI labs (e.g. via an AI incident database).

- Safety vs capabilities. A significant fraction of employees of AGI labs should work on enhancing model safety and alignment rather than capabilities.

- Internal review before publication. Before publishing research, AGI labs should conduct an internal review to assess potential harms.

- Pre-training risk assessment. AGI labs should conduct a risk assessment before training powerful models.

- Emergency response plan. AGI labs should have and practice implementing an emergency response plan. This might include switching off systems, overriding their outputs, or restricting access.

- Protection against espionage. AGI labs should take adequate measures to tackle the risk of state-sponsored or industrial espionage.*

- Pausing training of dangerous models. AGI labs should pause the development process if sufficiently dangerous capabilities are detected.

- Increasing level of external scrutiny. AGI labs should increase the level of external scrutiny in proportion to the capabilities of their models.

- Publish alignment strategy. AGI labs should publish their strategies for ensuring that their systems are safe and aligned.*

- Bug bounty programs. AGI labs should have bug bounty programs, i.e. recognize and compensate people for reporting unknown vulnerabilities and dangerous capabilities.

- Industry sharing of security information. AGI labs should share threat intelligence and information about security incidents with each other.*

- Security standards. AGI labs should comply with information security standards (e.g. ISO/IEC 27001 or NIST Cybersecurity Framework). These standards need to be tailored to an AGI context.

- Publish results of internal risk assessments. AGI labs should publish the results or summaries of internal risk assessments, unless this would unduly reveal proprietary information or itself produce significant risk. This should include a justification of why the lab is willing to accept remaining risks.*2

- Dual control. Critical decisions in model development and deployment should be made by at least two people (e.g. promotion to production, changes to training datasets, or modifications to production).*

- Publish results of external scrutiny. AGI labs should publish the results or summaries of external scrutiny efforts, unless this would unduly reveal proprietary information or itself produce significant risk.*

- Military-grade information security. The information security of AGI labs should be proportional to the capabilities of their models, eventually matching or exceeding that of intelligence agencies (e.g. sufficient to defend against nation states).

- Board risk committee. AGI labs should have a board risk committee, i.e. a permanent committee within the board of directors which oversees the lab’s risk management practices.*

- Chief risk officer. AGI labs should have a chief risk officer (CRO), i.e. a senior executive who is responsible for risk management.

- Statement about governance structure. AGI labs should make public statements about how they make high-stakes decisions regarding model development and deployment.*

- Publish views about AGI risk. AGI labs should make public statements about their views on the risks and benefits from AGI, including the level of risk they are willing to take in its development.

- KYC screening. AGI labs should conduct know-your-customer (KYC) screenings before giving people the ability to use powerful models.*

- Third-party governance audits. AGI labs should commission third-party audits of their governance structures.*

- Background checks. AGI labs should perform rigorous background checks before hiring/appointing members of the board of directors, senior executives, and key employees.*

- Model containment. AGI labs should contain models with sufficiently dangerous capabilities (e.g. via boxing or air-gapping).

- Staged deployment. AGI labs should deploy powerful models in stages. They should start with a small number of applications and fewer users, gradually scaling up as confidence in the model’s safety increases.

- Tracking model weights. AGI labs should have a system that is intended to track all copies of the weights of powerful models.*

- Internal audit. AGI labs should have an internal audit team, i.e. a team which assesses the effectiveness of the lab’s risk management practices. This team must be organizationally independent from senior management and report directly to the board of directors.

- No open-sourcing. AGI labs should not open-source powerful models, unless they can demonstrate that it is sufficiently safe to do so.3

- Researcher model access. AGI labs should give independent researchers API access to deployed models.

- API access to powerful models. AGI labs should strongly consider only deploying powerful models via an application programming interface (API).

- Avoiding hype. AGI labs should avoid releasing powerful models in a way that is likely to create hype around AGI (e.g. by overstating results or announcing them in attention-grabbing ways).

- Gradual scaling. AGI labs should only gradually increase the amount of compute used for their largest training runs.

- Treat updates similarly to new models. AGI labs should treat significant updates to a deployed model (e.g. additional fine-tuning) similarly to its initial development and deployment. In particular, they should repeat the pre-deployment risk assessment.

- Pre-registration of large training runs. AGI labs should register upcoming training runs above a certain size with an appropriate state actor.

- Enterprise risk management. AGI labs should implement an enterprise risk management (ERM) framework (e.g. the NIST AI Risk Management Framework or ISO 31000). This framework should be tailored to an AGI context and primarily focus on the lab’s impact on society.

- Treat internal deployments similarly to external deployments. AGI labs should treat internal deployments (e.g. using models for writing code) similarly to external deployments. In particular, they should perform a pre-deployment risk assessment.*4

- Notify a state actor before deployment. AGI labs should notify appropriate state actors before deploying powerful models.

- Notify affected parties. AGI labs should notify parties who will be negatively affected by a powerful model before deploying it.*

- Inter-lab scrutiny. AGI labs should allow researchers from other labs to scrutinize powerful models before deployment.*