The UFO AI Agent aims to seamlessly navigate applications within the Windows OS and orchestrate events to fulfil a user query.

Initial Observations

This Windows OS-based AI Agent, called UFO, could work well as a personal workflow optimiser, suggesting the optimal workflow to achieve a task on your PC.

We all have our own way of interacting with our UI; this agent can help optimise that personal workflow.

I once read that when a new type of UI is introduced, like a surface or touch screen, we start interacting with it, and over time loose patterns of behaviour are established, which later turn into UI design conventions.

The same is happening with AI Agents. Key ingredients of AI Agents are complex task decomposition and the creation of a sequence of chained steps, and AI Agent framework creators are converging on a common set of good ideas.

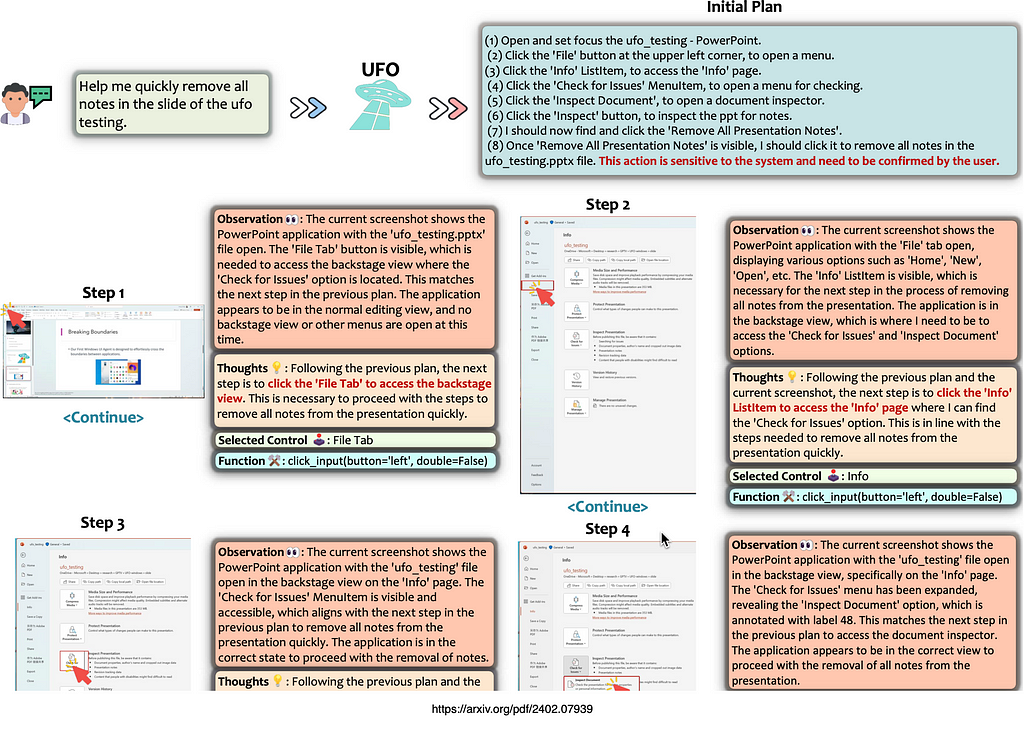

AI Agents go through an iterative process of action, observation and thought before taking the next step.
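This observation, thought and action cycle can be sketched as a small loop. It is a minimal illustration under stated assumptions, not UFO's implementation: `ToyEnvironment` is a hypothetical stand-in for a real UI, and `think` is a hypothetical rule-based policy standing in for an LLM call.

```python
from dataclasses import dataclass


@dataclass
class ToyEnvironment:
    """Hypothetical environment: a counter the agent must raise to a target."""
    value: int = 0
    target: int = 3

    def observe(self) -> str:
        # In a UI agent this would be a screenshot plus control details.
        return f"value={self.value}, target={self.target}"

    def act(self, action: str) -> None:
        if action == "increment":
            self.value += 1


def think(observation: str) -> str:
    """Hypothetical policy standing in for an LLM call: pick the next action."""
    fields = dict(part.split("=") for part in observation.split(", "))
    return "finish" if int(fields["value"]) >= int(fields["target"]) else "increment"


def run_agent(env: ToyEnvironment, max_steps: int = 10) -> list[str]:
    """Observe, think, act; repeat until the policy says 'finish' or the budget runs out."""
    trace = []
    for _ in range(max_steps):
        observation = env.observe()   # observation
        action = think(observation)   # thought
        trace.append(action)
        if action == "finish":
            break
        env.act(action)               # action
    return trace
```

Calling `run_agent(ToyEnvironment())` returns `['increment', 'increment', 'increment', 'finish']`. The step budget is a common safeguard so a confused policy cannot loop forever.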

AI Agents are also starting to exist within digital worlds, in this case Windows OS. Other examples are Apple’s iOS, or the web browser, as with WebVoyager.

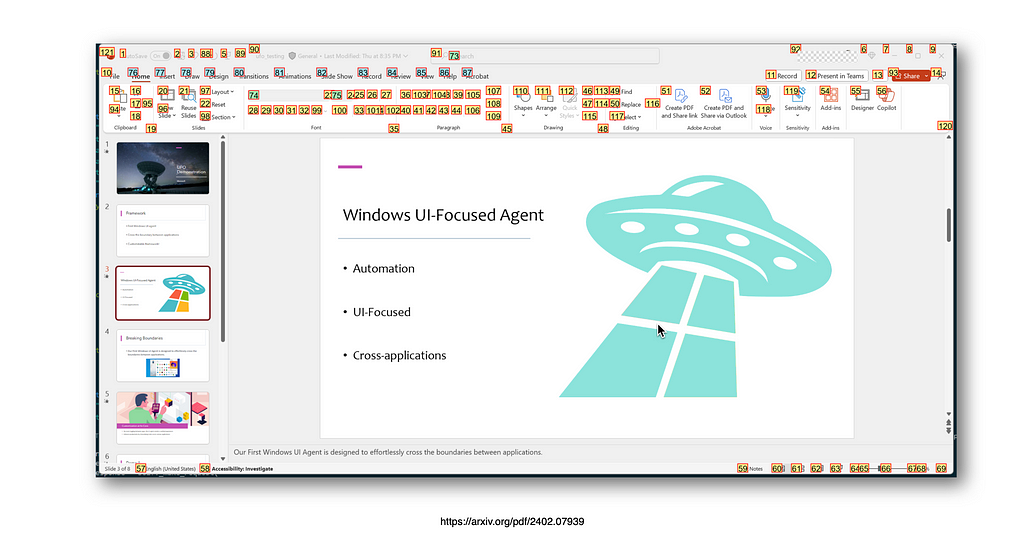

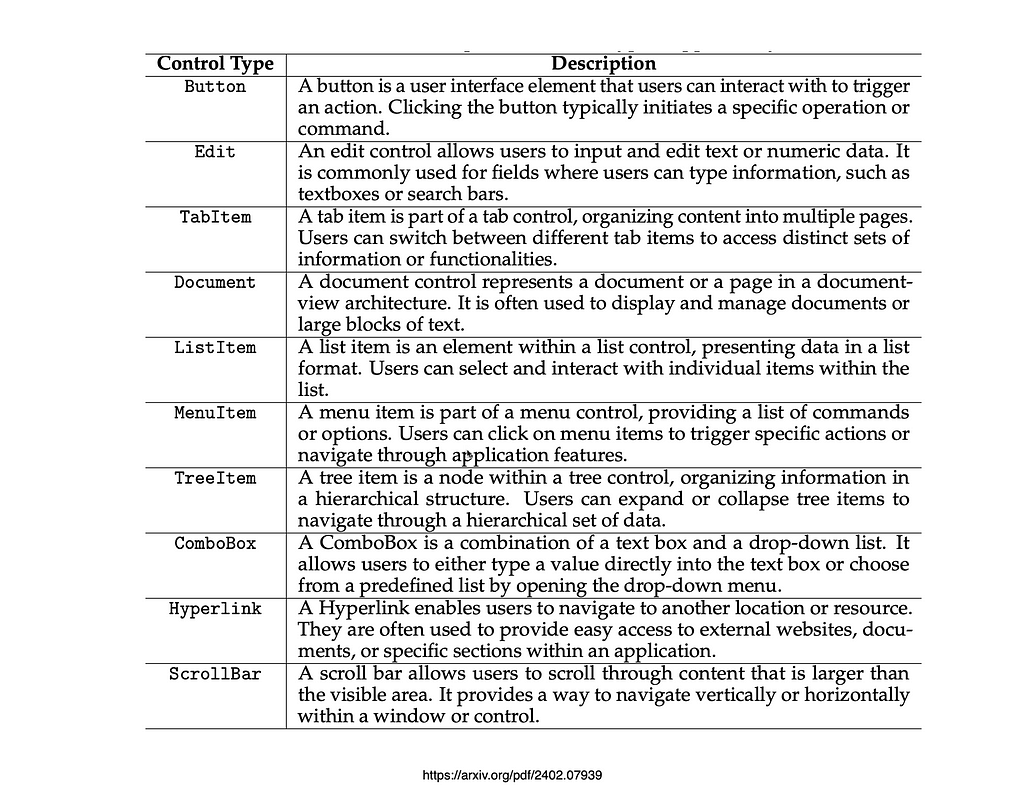

Just as we as users have design affordances at our disposal to interact and navigate, these affordances are also available to the AI Agent.

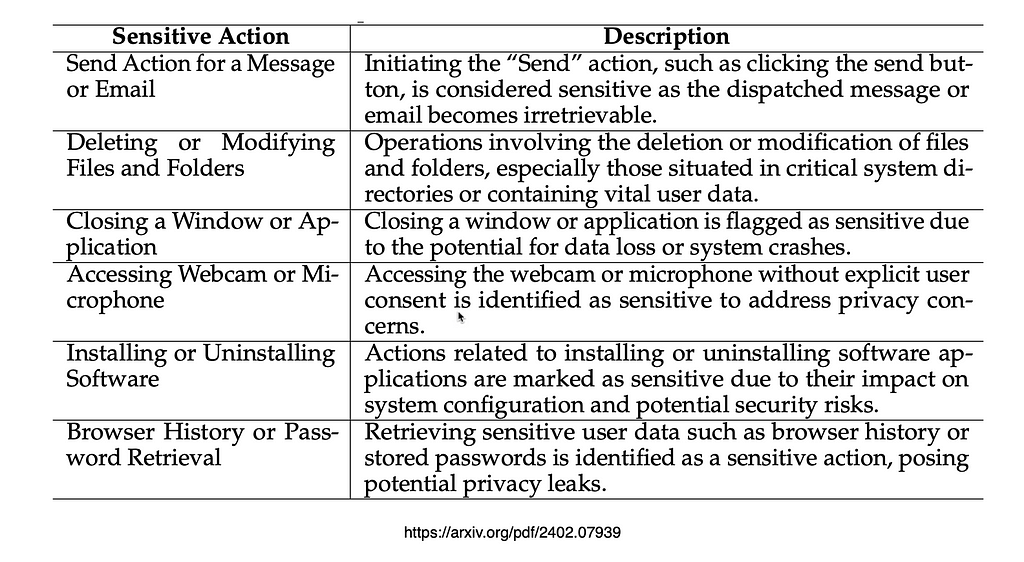

A set of actions has also been identified as potentially high in consequence, like deleting files or sending an email. The ramifications of these risks will grow considerably when AI Agents are embodied in the real world.
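One simple way to guard such actions is to require explicit user confirmation before anything on a sensitive list runs. The sketch below is illustrative only: the `SENSITIVE_ACTIONS` set and the `confirm` callback are hypothetical, and it does not reproduce UFO's own safeguard.

```python
from typing import Callable

# Hypothetical list of high-consequence actions; a real agent would define its own.
SENSITIVE_ACTIONS = {"delete_file", "send_email"}


def execute(action: str, target: str, confirm: Callable[[str], bool]) -> str:
    """Run an action, pausing for user confirmation if it is high in consequence."""
    if action in SENSITIVE_ACTIONS:
        if not confirm(f"Allow '{action}' on '{target}'?"):
            return "skipped"
    # Placeholder for the real UI interaction.
    return f"done: {action} {target}"
```

For example, `execute("send_email", "draft.msg", confirm=lambda prompt: False)` returns `"skipped"`, while an ordinary action such as `"click"` runs without asking.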

Lastly, quite a while ago I wrote about the ability of LLMs to perform symbolic reasoning, a capability which I felt did not receive the attention it deserved.

We all perform symbolic reasoning as humans: we observe a room and are able to mentally plan and project tasks based on what we have seen in a spatial setting. LLMs also have this capability, but visual scenes always had to be delivered to them via a text description. With the advent of vision capabilities in LLMs, images can now be used directly.

The image below shows a common trait of AI Agents operating in a digital environment, where observation, thought and action are really all language-based.

In user interface design, loose patterns of behaviour turn into UI design conventions over time.

UFO = “U”I-“Fo”cused AI Agent

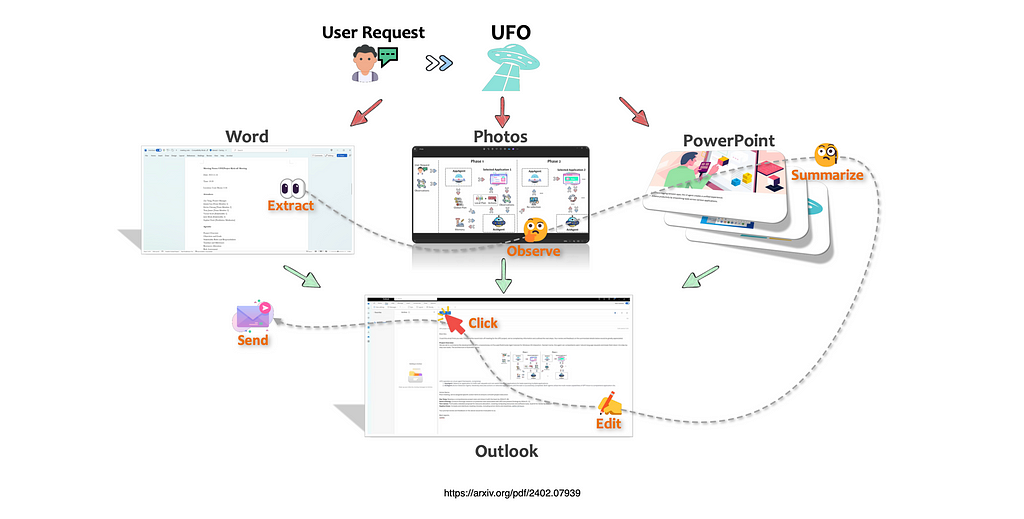

The goal of UFO as an AI agent is to effortlessly navigate and operate within individual applications, as well as across multiple apps, to complete user requests.

One powerful use-case is leveraging Vision-Language Models (VLMs) to interact with software interfaces, responding to natural language commands and executing them within real-world environments.

The development of Language Models with vision marks a shift from Large Language Models (LLMs) to Large Action Models (LAMs), enabling AI to translate decisions into real-world actions.

UFO also features an application-switching mechanism, allowing it to seamlessly transition between apps when necessary.

Vision-Language-Action models transfer web knowledge to robotic control

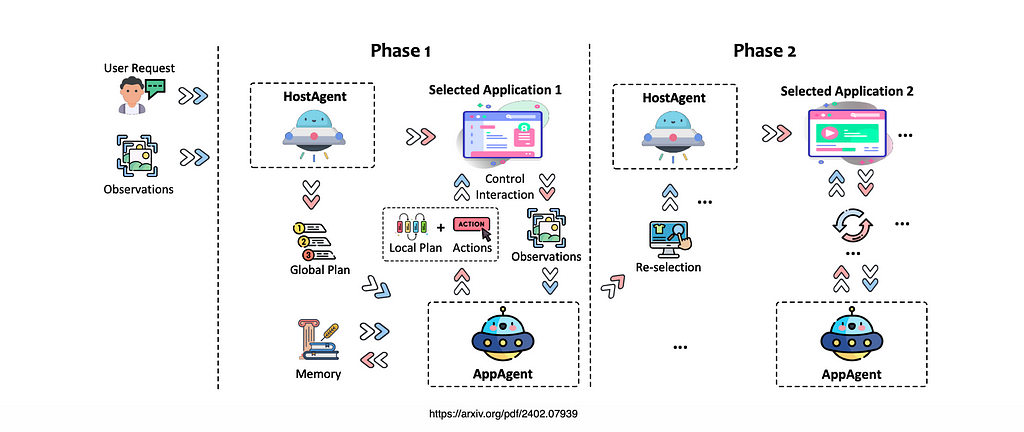

UFO Process Overview

Initial Setup — UFO provides HostAgent with a full desktop screenshot and a list of available applications. The HostAgent uses this information to select the appropriate application for the task and creates a global plan to complete the user request.

Focus and Execution — The selected application is brought into focus on the desktop. The AppAgent begins executing actions based on the global plan.

Action Selection — Before each action, UFO captures a screenshot of the current application window with annotated controls. UFO provides details about each control for AppAgent’s observation.

Below is an image with annotation examples…

Action Execution — the AppAgent chooses a control, selects an action to execute, and carries it out using a control interaction module.
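The annotation and execution steps can be pictured as a numbered table of controls plus a small dispatcher. This is a hypothetical sketch: the `Control` fields, the numeric label scheme and the `click` and `set_text` functions are assumptions for illustration, not UFO's control interaction module, which operates on real Windows UI controls.

```python
from dataclasses import dataclass


@dataclass
class Control:
    """Hypothetical record for one annotated control in the application window."""
    label: str         # number drawn on the annotated screenshot
    name: str          # control text, e.g. "Send"
    control_type: str  # e.g. "Button" or "Edit"
    text: str = ""


def annotate(controls: list[tuple[str, str]]) -> dict[str, Control]:
    """Give each (name, type) pair a sequential label the model can refer to."""
    return {
        str(i): Control(label=str(i), name=name, control_type=ctype)
        for i, (name, ctype) in enumerate(controls, start=1)
    }


def click(control: Control) -> str:
    return f"clicked {control.name}"


def set_text(control: Control, value: str) -> str:
    control.text = value
    return f"typed into {control.name}"


# Hypothetical mapping from the function name chosen by the model to an implementation.
ACTIONS = {"click": click, "set_text": set_text}


def execute_action(controls: dict[str, Control], label: str, function: str, *args: str) -> str:
    """Look up the chosen control by label and apply the chosen function to it."""
    return ACTIONS[function](controls[label], *args)
```

With `controls = annotate([("To", "Edit"), ("Send", "Button")])`, the model only needs to emit a label and a function name, e.g. `execute_action(controls, "2", "click")`, which returns `"clicked Send"`.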

After each action, UFO builds a local plan for the next step and continues the process until the task is completed in the application.

Handling Multi-App Requests

If the task requires multiple applications, AppAgent passes control back to HostAgent to switch to the next app.

The process is repeated for each application until the user request is fully completed.
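The two-tier flow can be sketched as nested loops: the HostAgent selects the next application, and the AppAgent works inside it until it finishes or hands control back. Everything here is a hypothetical, scripted stand-in; in UFO both decisions come from a vision-language model reading screenshots, not from a fixed list of apps and steps.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    """One scripted AppAgent step (a real agent would get this from a VLM)."""
    action: str
    status: str  # "CONTINUE", "FINISH" or "APP_SELECTION"


def host_agent(global_plan: list[str]) -> Optional[str]:
    """Hypothetical HostAgent: select the next application in the plan, or None when done."""
    return global_plan.pop(0) if global_plan else None


def app_agent(app: str, steps: list[Step]) -> list[str]:
    """Hypothetical AppAgent: act inside one app until it finishes or hands control back."""
    log = []
    for step in steps:
        log.append(f"{app}: {step.action}")
        if step.status in ("FINISH", "APP_SELECTION"):
            break
    return log


def run_ufo(apps_in_plan: list[str], scripts: dict[str, list[Step]]) -> list[str]:
    """Alternate between HostAgent (app selection) and AppAgent (execution)."""
    plan = list(apps_in_plan)  # copy so the caller's list is not consumed
    log = []
    while (app := host_agent(plan)) is not None:
        log.append(f"focus {app}")
        log.extend(app_agent(app, scripts[app]))
    return log
```

A Word-then-Outlook request would produce a log that focuses Word, copies the summary, hands back with `APP_SELECTION`, then focuses Outlook and runs until `FINISH`.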

To some extent, the HostAgent acts as the orchestration agent, while the AppAgents are agents in their own right. There are no tools per se; rather, the agents access applications.

Interactive Requests

Users can introduce new requests at any time, prompting UFO to repeat the process.

Once all user requests have been fulfilled, UFO ends its operation.

More On The HostAgent

The process begins with a detailed observation of the current desktop window, captured through screenshots that provide a clear view of the active interface.

Based on this observation, the next logical step to complete the task is determined, following the Chain-of-Thought (CoT) reasoning approach.

Once the appropriate application is selected, its label and name are identified and noted.

The status of the task is then assessed, with the system indicating whether to continue or finish.

Alongside this, a global plan is devised: typically a broad, high-level outline for fulfilling the user request. If this plan were visible to the user and editable, it would make for an excellent human-in-the-loop feedback mechanism and a basis for future improvements.

Throughout the process, additional comments or information are provided, often including a brief summary of progress or highlighting key points for further consideration.
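The HostAgent's reply can be treated as a structured record with one field per item described above. The field names below are illustrative choices, not necessarily the exact keys used in UFO's prompts, and the JSON reply format is an assumption.

```python
import json
from dataclasses import dataclass


@dataclass
class HostAgentResponse:
    """Hypothetical HostAgent output, with one field per item described above."""
    observation: str
    thought: str
    app_label: str     # label of the selected application
    app_name: str      # name of the selected application
    status: str        # "CONTINUE" or "FINISH"
    plan: list[str]    # global, high-level plan
    comment: str

    @classmethod
    def from_json(cls, raw: str) -> "HostAgentResponse":
        """Parse the model's JSON reply and reject unknown statuses."""
        data = json.loads(raw)
        if data.get("status") not in {"CONTINUE", "FINISH"}:
            raise ValueError(f"unexpected status: {data.get('status')}")
        return cls(**data)
```

Validating the status at parse time means a malformed model reply fails loudly instead of silently steering the loop.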

More On The AppAgent

The process starts with the user submitting a request to UFO, which is identical to the one received by the HostAgent.

UFO then captures screenshots of the application, divided into three types:

- a previous screenshot,

- a clean current one,

- and an annotated version showing available controls.

Alongside this, control information is collected, listing the names and types of controls available for interaction in the selected application.

The system also recalls previous thoughts, comments, actions and execution results, building a memory that mirrors the HostAgent’s own recollections.

Additionally, examples are provided to demonstrate possible action choices for the AppAgent.
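The AppAgent's input can be bundled into a single structure covering the items listed above. This is a hypothetical sketch: the field names and the text rendering are assumptions, and in practice the three screenshots would be passed to the vision-language model as images rather than text.

```python
from dataclasses import dataclass, field


@dataclass
class AppAgentInput:
    """Hypothetical bundle of everything the AppAgent receives at one step."""
    user_request: str                   # same request the HostAgent received
    previous_screenshot: bytes
    clean_screenshot: bytes
    annotated_screenshot: bytes
    control_info: list[dict[str, str]]  # label, name and type of each control
    memory: list[dict[str, str]] = field(default_factory=list)  # earlier steps (only action and result rendered here)
    examples: list[str] = field(default_factory=list)           # demonstrations of action choices

    def to_prompt_text(self) -> str:
        """Render the textual part; the three screenshots would be sent as images."""
        controls = "\n".join(
            f"[{c['label']}] {c['name']} ({c['type']})" for c in self.control_info
        )
        history = "\n".join(f"- {m['action']} -> {m['result']}" for m in self.memory)
        return (
            f"Request: {self.user_request}\n"
            f"Controls:\n{controls}\n"
            f"History:\n{history or '- none'}\n"
            f"Examples provided: {len(self.examples)}"
        )
```

Keeping memory as a growing list makes it easy to append each step's outcome, so the next prompt reflects what has already been tried.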

With this comprehensive input, AppAgent carefully analyses the details.

First, it makes an observation, providing a detailed description of the current application window and evaluating whether the last action had the intended effect.

The rationale behind each action is also considered, as the AppAgent logically determines the next move.

Control

Once a control is selected for interaction, its label and name are identified, and the specific function to be performed on it is defined.

The AppAgent then assesses the task status and reports one of the following:

- continue, if further actions are needed,

- finish, if the task is complete,

- pending, if awaiting user confirmation,

- screenshot, if a new screenshot is required for more control annotations,

- or App Selection, if it’s time to switch to another application.
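These five task statuses map naturally onto an enumeration with a dispatcher deciding what the framework does next. The string values and the descriptions returned by `next_step` are assumptions for illustration, not UFO's own constants.

```python
from enum import Enum


class AppStatus(Enum):
    """Task statuses the AppAgent can report (values are illustrative)."""
    CONTINUE = "CONTINUE"
    FINISH = "FINISH"
    PENDING = "PENDING"
    SCREENSHOT = "SCREENSHOT"
    APP_SELECTION = "APP_SELECTION"


def next_step(status: AppStatus) -> str:
    """Hypothetical dispatcher mapping a reported status to the framework's next move."""
    return {
        AppStatus.CONTINUE: "take the next action in this application",
        AppStatus.FINISH: "stop: the task is complete",
        AppStatus.PENDING: "wait for the user to confirm",
        AppStatus.SCREENSHOT: "capture a new annotated screenshot",
        AppStatus.APP_SELECTION: "return control to the HostAgent",
    }[status]
```

Parsing the model's raw string with `AppStatus("PENDING")` raises `ValueError` on anything unexpected, which keeps the control loop from acting on a typo.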

To ensure smooth progress, the AppAgent generates a local plan, a more detailed and precise roadmap for upcoming steps to fully satisfy the user request.

Throughout this process, additional comments are provided, summarising progress or highlighting key points, mirroring the feedback offered by the HostAgent.

Observation & Thought

When HostAgent is prompted to provide its Observation and Thoughts, it serves two key purposes.

First, it pushes HostAgent to thoroughly analyse the current state of the task, offering a clear explanation of its logic and decision-making process.

This not only strengthens the internal consistency of its choices but also makes UFO’s operations more transparent and easier to understand.

Second, HostAgent assesses the task’s progress, outputting “FINISH” if the task is complete.

It can also provide feedback to the user, such as reporting progress, pointing out potential issues, or answering any queries.

Once the correct application is identified, UFO moves forward with the task, and AppAgent takes charge of executing the necessary actions within the application to fulfil the user request.

Design Considerations

UFO integrates a range of design features specifically crafted for the Windows OS.

These enhancements streamline interactions with UI controls, making them more efficient, automated, and secure, ultimately improving UFO’s ability to handle user requests.

Key aspects include interactive mode, customisable actions, control filtering, plan reflection, and safety mechanisms, each of which is discussed in more detail in the following sections.

Interactive Mode

UFO allows users to engage in interactive and iterative exchanges instead of relying on one-time completions.

After finishing a task, users can request enhancements to the previous task, propose entirely new tasks, or even assist UFO with operations it might struggle with, such as entering a password.

The researchers believe this user-friendly approach sets UFO apart from other UI agents on the market, enabling it to absorb user feedback and effectively manage longer, more complex tasks.
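Interactive mode amounts to a session loop that keeps accepting requests and can hand a step back to the user. In this hypothetical sketch, `handle` stands in for a full UFO run, the `"NEEDS_USER"` marker and the `ask_user` callback are assumptions modelling a step such as entering a password, and typing `quit` ends the session.

```python
from typing import Callable, Iterable


def interactive_session(
    requests: Iterable[str],
    handle: Callable[[str], str],
    ask_user: Callable[[str], str],
) -> list[str]:
    """Serve requests one after another until the user types 'quit'."""
    transcript = []
    for request in requests:
        if request.strip().lower() == "quit":
            break  # no further requests: end the session
        result = handle(request)
        if result == "NEEDS_USER":
            # e.g. a password prompt: the user completes this step manually
            result = ask_user(request)
        transcript.append(f"{request} -> {result}")
    return transcript
```

Because the loop only ends on an explicit `quit`, a follow-up such as "make the reply shorter" is simply the next request in the same session.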


Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.


- COBUS GREYLING

- UFO: A UI-Focused Agent for Windows OS Interaction

- GitHub – microsoft/UFO: A UI-Focused Agent for Windows OS Interaction.